What is the distribution of $\mathrm{tr}(AA'BB')$, where $A$ and $B$ are two random $d \times k$ matrices with orthonormal columns?

Maybe the expected value is easier to compute? A fallback solution would be to use simulation. What would be the most efficient simulation scheme? Typical values of $d$ are around 2000, while $k$ ranges from about 10 to a few hundred.
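As a baseline for the simulation route, here is a minimal Monte Carlo sketch in Python with NumPy. It assumes that "random" means uniformly distributed (Haar) over $k$-dimensional subspaces and that the two subspaces are independent; the function name `sample_trace_qr` is hypothetical. By rotational invariance, $A$ can be fixed to the first $k$ canonical vectors, so only $B$ is drawn, as the Q factor of a $d \times k$ Gaussian matrix. Each draw costs $O(dk^2)$.

```python
import numpy as np


def sample_trace_qr(d, k, n_samples, seed=0):
    """Monte Carlo samples of tr(AA'BB') for two independent, uniformly random
    k-dimensional subspaces of R^d (assumption: Haar/Grassmannian-uniform)."""
    rng = np.random.default_rng(seed)
    out = np.empty(n_samples)
    for s in range(n_samples):
        # The column span of a d x k standard Gaussian matrix is uniformly
        # distributed; the reduced QR factor B is an orthonormal basis of it.
        B, _ = np.linalg.qr(rng.standard_normal((d, k)))
        # With A = first k canonical vectors, tr(AA'BB') = ||A'B||_F^2,
        # i.e. the sum of squares of the first k rows of B.
        out[s] = np.sum(B[:k] ** 2)
    return out


if __name__ == "__main__":
    d, k = 2000, 50
    t = sample_trace_qr(d, k, 500)
    print("empirical mean:", t.mean(), "exact mean k^2/d:", k**2 / d)
```

Its sample mean should sit close to $k^2/d$, so the normalised score $\mathrm{tr}(AA'BB')/k$ averages $k/d$ for unrelated subspaces.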

Below is a more detailed account of my problem and its context, how I came to ask this question, and what I tried.

Context

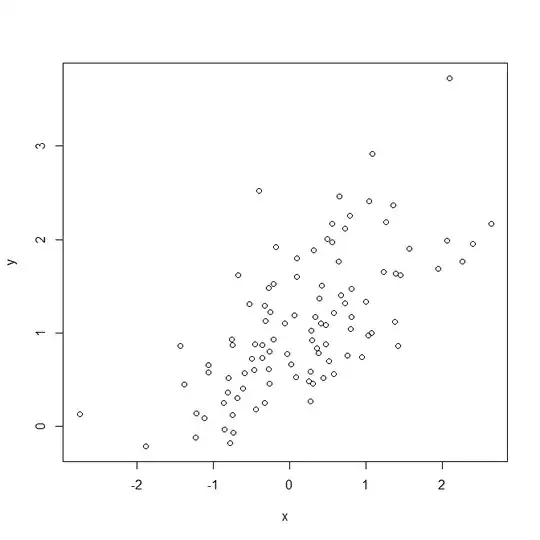

I want to check whether the principal components computed from a sample of a stochastic process have converged. My current ideas involve comparing the subspaces spanned by the first $k$ principal components, for the values of $k$ of interest, either across several realisations of the stochastic process or across bootstrapped principal components. My criterion for subspace similarity is $\mathrm{tr}(AA'BB') / k$, where $A$ and $B$ are matrices whose $k$ columns are orthonormal bases of the two subspaces to compare. This criterion is easy to compute and behaves well except for the following property: as the dimension of the subspaces approaches the total dimension, the remaining angular space in which to point in «wrong» directions shrinks, so even unrelated subspaces get a high score. In order to build a more meaningful criterion, I thought of comparing this score to the score obtained by comparing two random subspaces of dimension $k$.
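The criterion never requires the $d \times d$ projectors: since $\mathrm{tr}(AA'BB') = \mathrm{tr}(A'BB'A) = \lVert A'B \rVert_F^2$, only a $k \times k$ product is needed. A minimal Python/NumPy sketch (function names are hypothetical) that also shows the score equals the mean squared cosine of the principal angles between the two subspaces, these cosines being the singular values of $A'B$ as in Björck & Golub:

```python
import numpy as np


def subspace_similarity(A, B):
    """Criterion tr(AA'BB')/k for d x k matrices A, B with orthonormal columns.

    Uses tr(AA'BB') = ||A'B||_F^2, so only the k x k matrix A'B is formed."""
    k = A.shape[1]
    M = A.T @ B
    return float(np.sum(M * M)) / k


def principal_cosines(A, B):
    """Cosines of the principal angles between span(A) and span(B),
    computed as the singular values of A'B."""
    return np.linalg.svd(A.T @ B, compute_uv=False)


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    d, k = 2000, 20
    A, _ = np.linalg.qr(rng.standard_normal((d, k)))
    B, _ = np.linalg.qr(rng.standard_normal((d, k)))
    print(subspace_similarity(A, B), np.mean(principal_cosines(A, B) ** 2))
```

Because the score depends only on the subspaces, replacing $A$ by $AU$ for any $k \times k$ orthogonal $U$ leaves it unchanged.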

My attempt

My first attempt was to note that, without loss of generality, the first random subspace can be taken to be spanned by the first $k$ vectors of the canonical basis.

A basis for the other random subspace can then be built by first picking $k$ vectors from the canonical basis at random, without replacement.

The resulting distribution of $\mathrm{tr}(AA'BB')$ is then simply a hypergeometric law: $k$ draws from a pool of $d$ vectors, $k$ of which give a positive outcome (the first $k$ vectors of the canonical basis), where $d$ is the dimension of the total space, i.e. $\mathrm{H}(d, k, k/d)$.
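The canonical-basis construction can be checked by simulating it directly. A minimal sketch, assuming NumPy (function names are hypothetical), that draws $k$ canonical vectors without replacement, evaluates $\mathrm{tr}(AA'BB')$, and compares the empirical moments with those of the hypergeometric law, whose mean is $k^2/d$ and variance $k^2(d-k)^2/(d^2(d-1))$:

```python
import numpy as np


def canonical_pick_model(d, k, n_samples, seed=0):
    """Samples of tr(AA'BB') when A holds the first k canonical vectors and
    B holds k canonical vectors picked uniformly without replacement."""
    rng = np.random.default_rng(seed)
    eye = np.eye(d)
    A = eye[:, :k]
    out = np.empty(n_samples)
    for s in range(n_samples):
        cols = rng.choice(d, size=k, replace=False)
        B = eye[:, cols]
        # tr(AA'BB') = ||A'B||_F^2 = number of picked indices among the first k
        out[s] = np.sum((A.T @ B) ** 2)
    return out


def hypergeometric_moments(d, k):
    """Mean and variance of H(d, k, k/d): k draws from d items, k of them successes."""
    mean = k * k / d
    var = k**2 * (d - k) ** 2 / (d**2 * (d - 1))
    return mean, var


if __name__ == "__main__":
    d, k = 200, 20
    t = canonical_pick_model(d, k, 10000)
    print(t.mean(), t.var(), hypergeometric_moments(d, k))
```

Under this construction the trace only takes integer values, whereas for two uniformly random subspaces it varies continuously in $[0, k]$.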

Now, there is no reason for the vectors of the two bases to be either aligned or orthogonal. I suppose it is possible to remedy this by applying a random rotation $R$ and looking at $\mathrm{tr}(AA'RBB'R')$. I am not sure how a random rotation in $\mathbb{R}^d$ behaves, but maybe it is possible to sort this out using properties of the trace and the fact that $R' = R^{-1}$?
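A sketch of how the rotation argument can be carried through, assuming $R$ is uniformly (Haar) distributed on the orthogonal group and independent of $A$ and $B$. Write $P = AA'$ and $Q = BB'$. Then $RQR'$ is a uniformly distributed rank-$k$ projector; its expectation commutes with every orthogonal matrix, so it is a multiple of $I_d$, and its trace is $k$:

$$\mathbb{E}[RQR'] = \frac{k}{d} I_d \quad\Longrightarrow\quad \mathbb{E}\,\mathrm{tr}(P\,RQR') = \frac{k}{d}\,\mathrm{tr}(P) = \frac{k^2}{d}.$$

For the variance, by the same invariance $P$ can be taken as the projector onto the first $k$ canonical vectors. With $\tilde Q = RQR'$, $\mathrm{tr}(P\tilde Q) = \sum_{i \le k} \tilde Q_{ii}$, and each $\tilde Q_{ii}$ is the squared norm of the projection of $e_i$ onto a uniformly random $k$-dimensional subspace, so $\tilde Q_{ii} \sim \mathrm{Beta}\big(k/2, (d-k)/2\big)$. The diagonal entries are exchangeable and sum to $\mathrm{tr}(\tilde Q) = k$, which forces $\mathrm{Cov}(\tilde Q_{ii}, \tilde Q_{jj}) = -\mathrm{Var}(\tilde Q_{11})/(d-1)$ for $i \ne j$. Hence

$$\mathrm{Var}(\tilde Q_{11}) = \frac{2k(d-k)}{d^2(d+2)}, \qquad \mathrm{Var}\,\mathrm{tr}(P\tilde Q) = k\,\frac{d-k}{d-1}\,\mathrm{Var}(\tilde Q_{11}) = \frac{2k^2(d-k)^2}{d^2(d-1)(d+2)}.$$

For comparison, $\mathrm{H}(d, k, k/d)$ has the same mean $k^2/d$ but variance $k^2(d-k)^2/\big(d^2(d-1)\big)$, i.e. $(d+2)/2$ times larger.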

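Note: random orthogonal projectors are related to the Wishart distribution: a uniformly random rank-$k$ projector can be written as $G(G'G)^{-1}G'$ with $G$ a $d \times k$ matrix of i.i.d. standard Gaussian entries, and $G'G$ is Wishart distributed. I do not know more about this, however.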
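A Wishart representation also gives a cheaper simulation scheme. If $B$ is an orthonormal basis of the column span of a $d \times k$ matrix $G$ of i.i.d. standard Gaussian entries and $A$ is fixed to the first $k$ canonical vectors, then splitting $G$ into its first $k$ rows $G_1$ and remaining rows $G_2$ gives $\mathrm{tr}(AA'BB') = \mathrm{tr}\big((W_1 + W_2)^{-1} W_1\big)$ with independent $W_1 = G_1'G_1 \sim \mathcal{W}_k(k, I)$ and $W_2 = G_2'G_2 \sim \mathcal{W}_k(d-k, I)$. Drawing both with the Bartlett decomposition costs $O(k^3)$ per sample instead of $O(dk^2)$. The sketch assumes NumPy, $k \le d/2$, and uniformly random subspaces; the function names are hypothetical.

```python
import numpy as np


def bartlett_wishart(n, p, rng):
    """Draw W ~ Wishart_p(n, I_p) via the Bartlett decomposition W = L L' (needs n >= p)."""
    L = np.tril(rng.standard_normal((p, p)), k=-1)  # N(0, 1) strictly below the diagonal
    # Diagonal: square roots of chi-square draws with n, n-1, ..., n-p+1 degrees of freedom
    L[np.diag_indices(p)] = np.sqrt(rng.chisquare(n - np.arange(p)))
    return L @ L.T


def sample_trace_wishart(d, k, n_samples, seed=0):
    """Samples of tr(AA'BB') for uniformly random k-dim subspaces, O(k^3) per draw,
    using tr(AA'BB') = tr((W1 + W2)^{-1} W1) with W1 ~ W_k(k, I), W2 ~ W_k(d-k, I)."""
    if 2 * k > d:
        raise ValueError("this sketch assumes k <= d/2 so that Bartlett applies to W2")
    rng = np.random.default_rng(seed)
    out = np.empty(n_samples)
    for s in range(n_samples):
        W1 = bartlett_wishart(k, k, rng)
        W2 = bartlett_wishart(d - k, k, rng)
        # Linear solve instead of an explicit inverse of W1 + W2
        out[s] = np.trace(np.linalg.solve(W1 + W2, W1))
    return out


if __name__ == "__main__":
    d, k = 2000, 100
    t = sample_trace_wishart(d, k, 1000)
    print("empirical mean:", t.mean(), "exact mean k^2/d:", k**2 / d)
```

For $k > d/2$, one can work with the orthogonal complements instead, since $\mathrm{tr}\big((I-AA')(I-BB')\big) = d - 2k + \mathrm{tr}(AA'BB')$ and the complements have dimension $d - k < d/2$.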

Related references:

- Absil, Edelman & Koev, “On the largest principal angle between random subspaces”, Linear Algebra and its Applications, 2006, doi:10.1016/j.laa.2005.10.004 (I did not read this one)
- Björck & Golub, “Numerical Methods for Computing Angles Between Linear Subspaces”, Mathematics of Computation, 1973
- Ipsen & Meyer, “The Angle Between Complementary Subspaces”, The American Mathematical Monthly, 1995
- Johnstone, “Multivariate analysis and Jacobi ensembles: Largest eigenvalue, Tracy-Widom limits and rates of convergence”, Annals of Statistics, 2008 (too esoteric for me)
- Liquet & Saracco, “Application of the Bootstrap Approach to the Choice of Dimension and the $\alpha$ Parameter in the SIR$\alpha$ Method”, Communications in Statistics: Simulation and Computation, 2008
- Wang, Wang & Feng, “Subspace distance analysis with application to adaptive Bayesian algorithm for face recognition”, Pattern Recognition, 2006
- Zuccon, Azzopardi & van Rijsbergen, “Semantic Spaces: Measuring the Distance between Different Subspaces”, 2009
- Hotelling, “Relations Between Two Sets of Variates”, Biometrika, 1936 (possibly appropriate tests are given in paragraphs 11 and following for low-dimensional cases, and extended in paragraph 15 to higher dimensions)
- Bao, Hu, Pan & Zhou, “Test of independence for high-dimensional random vectors based on freeness in block correlation matrices”, Electronic Journal of Statistics, 2017