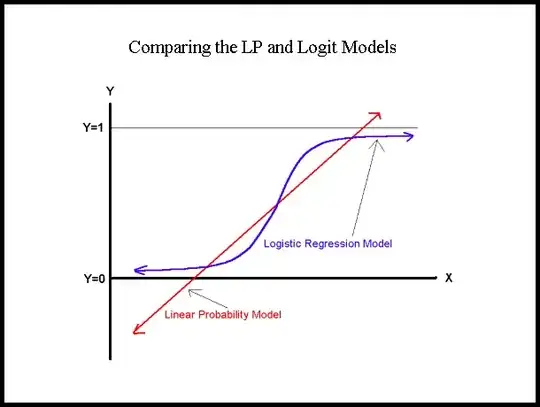

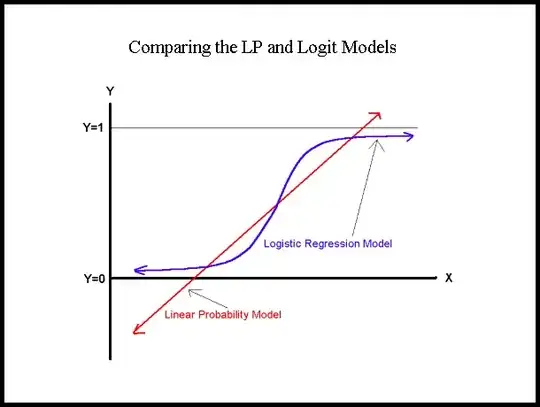

What you are proposing is a linear probability model, i.e. an OLS regression for a binary dependent variable. The key difference is that logit is a non-linear model, whereas the linear probability model is (as the name says) linear. The difference is perhaps best understood graphically.
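To make the contrast concrete, here are the two models written out for a single regressor $x$. This is a simplification assumed for illustration, and $\Lambda$ is notation introduced here for the logistic CDF. The LPM's slope is its marginal effect everywhere. The logit's marginal effect depends on where along the curve you evaluate it, and evaluating it at $\bar{x}$ gives the "marginal effect at the mean":

$$
\begin{aligned}
\text{LPM (OLS):}\quad & P(y = 1 \mid x) = \beta_0 + \beta_1 x, && \frac{\partial P}{\partial x} = \beta_1 \\
\text{Logit:}\quad & P(y = 1 \mid x) = \Lambda(\beta_0 + \beta_1 x) = \frac{1}{1 + e^{-(\beta_0 + \beta_1 x)}}, && \frac{\partial P}{\partial x} = \beta_1 \, \Lambda(\beta_0 + \beta_1 x)\,\bigl[1 - \Lambda(\beta_0 + \beta_1 x)\bigr]
\end{aligned}
$$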

If you calculate the marginal effect of your logistic regression coefficients at the mean, you will likely get estimates very similar to those from the OLS regression (in the graph, this is where the blue and red lines intersect, or at least close to it). The picture also nicely shows the problem with OLS in this case: it predicts outside the theoretical range, so it can give you predicted probabilities that are larger than one or smaller than zero. There are other advantages and disadvantages of either model (see, for example, these lecture notes for a summary).
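Below is a minimal sketch of this comparison in Python on simulated data, using only the standard library. Everything in it is an assumption for illustration: the data-generating process (a true logit with β0 = 0, β1 = 1 and x ~ N(0, 1)), the helper names, and the hand-rolled Newton-Raphson fit, which stands in for a statistics package so the block runs anywhere. It fits the LPM by OLS and the logit by maximum likelihood, computes the logit marginal effect at the mean of x, and counts the LPM fitted values that fall outside [0, 1].

```python
import math
import random


def logistic(z):
    """Logistic CDF: maps any real number into (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def simulate(n=5000, beta0=0.0, beta1=1.0, seed=1):
    """Hypothetical data: x ~ N(0, 1), y drawn from a true logit model."""
    rng = random.Random(seed)
    xs = [rng.gauss(0.0, 1.0) for _ in range(n)]
    ys = [1 if rng.random() < logistic(beta0 + beta1 * x) else 0 for x in xs]
    return xs, ys


def fit_ols(xs, ys):
    """Linear probability model: closed-form OLS intercept and slope."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    b1 = sxy / sxx
    return my - b1 * mx, b1


def fit_logit(xs, ys, iters=25):
    """Logit by Newton-Raphson on the log-likelihood (intercept + one slope)."""
    b0, b1 = 0.0, 0.0
    for _ in range(iters):
        g0 = g1 = h00 = h01 = h11 = 0.0
        for x, y in zip(xs, ys):
            p = logistic(b0 + b1 * x)
            w = p * (1.0 - p)
            g0 += y - p          # gradient w.r.t. intercept
            g1 += (y - p) * x    # gradient w.r.t. slope
            h00 += w             # entries of the negative Hessian
            h01 += w * x
            h11 += w * x * x
        det = h00 * h11 - h01 * h01
        # Newton step: beta += H^{-1} g, with H the (2x2) negative Hessian
        b0 += (h11 * g0 - h01 * g1) / det
        b1 += (-h01 * g0 + h00 * g1) / det
    return b0, b1


def marginal_effect_at_mean(b0, b1, xs):
    """dP/dx of the logit evaluated at the sample mean of x."""
    x_bar = sum(xs) / len(xs)
    p_bar = logistic(b0 + b1 * x_bar)
    return b1 * p_bar * (1.0 - p_bar)


xs, ys = simulate()
a_ols, b_ols = fit_ols(xs, ys)
b0_logit, b1_logit = fit_logit(xs, ys)
me_logit = marginal_effect_at_mean(b0_logit, b1_logit, xs)

lpm_fitted = [a_ols + b_ols * x for x in xs]
logit_fitted = [logistic(b0_logit + b1_logit * x) for x in xs]
n_outside = sum(1 for p in lpm_fitted if p < 0.0 or p > 1.0)

print(f"OLS (LPM) slope:                  {b_ols:.3f}")
print(f"logit marginal effect at mean:    {me_logit:.3f}")
print(f"LPM fitted values outside [0, 1]: {n_outside} of {len(xs)}")
print(f"logit fitted range: ({min(logit_fitted):.3f}, {max(logit_fitted):.3f})")
```

In this setup the two slope estimates land in the same ballpark, while a number of LPM fitted values fall below zero or above one and every logit fitted value stays strictly inside (0, 1). How close the two slopes are depends on how much of the S-shaped curve the data cover; a flatter true relationship (smaller β1) brings them closer together.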

In this sense, there is nothing "wrong" with your approach. It really depends on what you want to do with your model. If you are interested in estimating the marginal effect of your explanatory variables on the outcome probability, then either is fine. If you want to make predictions, then the linear probability model is not a good choice, given that its predicted probabilities are not guaranteed to lie between zero and one.