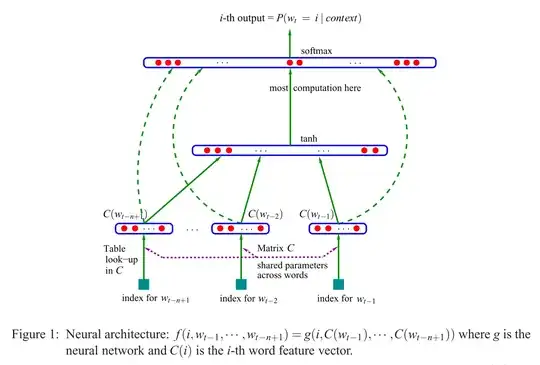

As I was browsing around regarding CBOW issues and stumbled upon this, here is an alternative answer to your (first) question ("What is a projection layer vs. matrix?"), by looking at the NNLM model (Bengio et al., 2003):

If comparing this to Mikolov's model[s] (shown in an alternative answer to this question), the cited sentence (in the question) means that Mikolov removed the (non-linear!) $tanh$ layer seen in Bengio's model shown above. And Mikolov's first (and only) hidden layer, instead of having individual vectors $C(w_i)$ for each word, only uses one vector that sums up the "word parameters", and then those sums get averaged. So this explains the last question ("What does it mean that the vectors are averaged?"). The words are "projected into the same position" because the weights assigned to the individual input words are summed up and averaged in Mikolov's model. Therefore, his projection layer looses all positional information, unlike Bengio's first hidden layer (aka. the projection matrix) - thereby answering the second question ("What does it mean that all words get projected into the same position?"). So Mikolov's model[s] retained the "word parameters" (the input weight matrix), removed the projection matrix $C$ and the $tanh$ layer, and replaced both with a "simple" projection layer.

To add, and "just for the record": The real exciting part is Mikolov's approach to solving the part where in Bengio's image you see the phrase "most computation here". Bengio tried to lessen that problem by doing something that is called hierarchical softmax (instead of just using the softmax) in a later paper (Morin & Bengio 2005). But Mikolov, with his strategy of negative subsampling took this a step further: He doesn't compute the negative log-likelihood of all "wrong" words (or Huffman codings, as Bengio suggested in 2005) at all, and just computes a very small sample of negative cases, which, given enough such computations and a clever probability distribution, works extremely well. And the second and even more major contribution, naturally, is that the whole thing about his additive "compositionality" ("man + king = woman + ?" with answer queen), which only really works well with his Skip-Gram model, and can be roughly understood as taking Bengio's model, applying the changes Mikolov suggested (i.e., the phrase cited in your question), and then inverting the whole process. That is, guessing the surrounding words from the output words (now used as input), $P(context | w_t = i)$, instead.