It's not clear how much intuition a reader of this question might have about convergence of anything, let alone of random variables, so I will write as if the answer is "very little". Something that might help: rather than thinking "how can a random variable converge", ask how a sequence of random variables can converge. In other words, it's not just a single variable, but an (infinitely long!) list of variables, and ones later in the list are getting closer and closer to ... something. Perhaps a single number, perhaps an entire distribution. To develop an intuition, we need to work out what "closer and closer" means. The reason there are so many modes of convergence for random variables is that there are several types of "closeness" I might measure.

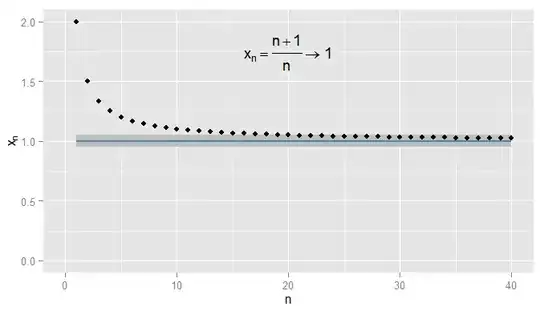

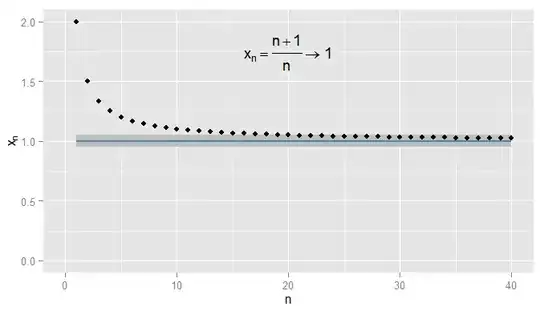

First let's recap convergence of sequences of real numbers. In $\mathbb{R}$ we can use Euclidean distance $|x-y|$ to measure how close $x$ is to $y$. Consider $x_n = \frac{n+1}{n} = 1 + \frac{1}{n}$. Then the sequence $x_1, \, x_2, \, x_3, \dots$ starts $2, \frac{3}{2}, \frac{4}{3}, \frac{5}{4}, \frac{6}{5}, \dots$ and I claim that $x_n$ converges to $1$. Clearly $x_n$ is getting closer to $1$, but it's also true that $x_n$ is getting closer to $0.9$. For instance, from the third term onwards, the terms in the sequence are a distance of $0.5$ or less from $0.9$. What matters is that they are getting arbitrarily close to $1$, but not to $0.9$. No term in the sequence ever comes within $0.05$ of $0.9$ (every term exceeds $1$), let alone stays that close for subsequent terms. In contrast, $x_{20}=1.05$ is exactly $0.05$ from $1$, and all subsequent terms are within $0.05$ of $1$, as shown below.

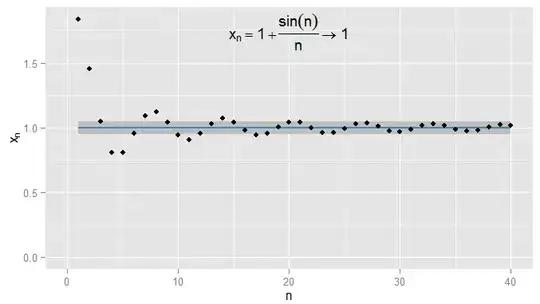

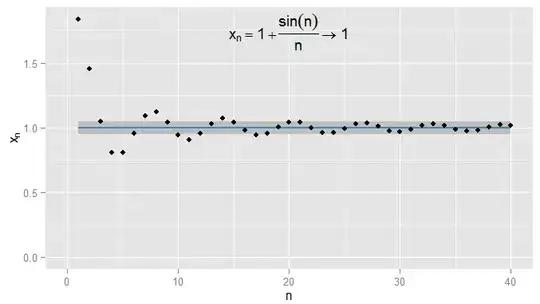

I could be stricter and demand terms get and stay within $0.001$ of $1$, and in this example I find this is true for the terms $N=1000$ and onwards. Moreover I could choose any fixed threshold of closeness $\epsilon$, no matter how strict (except for $\epsilon = 0$, i.e. the term actually being $1$), and eventually the condition $|x_n - x| \lt \epsilon$ will be satisfied for all terms beyond a certain term (symbolically: for $n \gt N$, where the value of $N$ depends on how strict an $\epsilon$ I chose). For more sophisticated examples, note that I'm not necessarily interested in the first time that the condition is met - the next term might not obey the condition, and that's fine, so long as I can find a term further along the sequence for which the condition is met and stays met for all later terms. I illustrate this for $x_n = 1 + \frac{\sin(n)}{n}$, which also converges to $1$, with $\epsilon=0.05$ shaded again.
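To make the $\epsilon$-$N$ bookkeeping concrete, here is a minimal Python sketch (the helper name `last_violation` is hypothetical, chosen for illustration). It scans the first `n_max` terms and reports the last index at which $|x_n - 1| \ge \epsilon$; every later term it checks lies strictly within $\epsilon$ of the limit. Checking finitely many terms only illustrates the definition; it does not prove convergence.

```python
import math
from fractions import Fraction


def last_violation(x, limit, eps, n_max):
    """Largest n <= n_max with |x(n) - limit| >= eps, or 0 if there is none.

    Every term after the returned index (up to n_max) is strictly within eps
    of the limit. Only finitely many terms are checked, so this illustrates
    the definition of convergence rather than proving it.
    """
    last = 0
    for n in range(1, n_max + 1):
        if abs(x(n) - limit) >= eps:
            last = n
    return last


def x_simple(n):
    # x_n = (n + 1) / n = 1 + 1/n, kept as an exact fraction to avoid rounding
    return Fraction(n + 1, n)


def x_sine(n):
    # x_n = 1 + sin(n) / n, which also converges to 1
    return 1 + math.sin(n) / n


print(last_violation(x_simple, 1, Fraction(1, 20), 10_000))    # 20
print(last_violation(x_simple, 1, Fraction(1, 1000), 10_000))  # 1000
print(last_violation(x_sine, 1.0, 0.05, 10_000))               # 17
```

For the $\sin$ example, the condition first holds at $n = 3$ ($|\sin 3|/3 \approx 0.047$) but fails again at $n = 4$ ($|\sin 4|/4 \approx 0.19$), which is why the sketch tracks the last violation rather than the first success.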

Now consider $X \sim U(0,1)$ and the sequence of random variables $X_n = \left(1 + \frac{1}{n}\right) X$. This is a sequence of RVs with $X_1 = 2X$, $X_2 = \frac{3}{2} X$, $X_3 = \frac{4}{3} X$ and so on. In what senses can we say this is getting closer to $X$ itself?

Since $X_n$ and $X$ are random variables, not just single numbers, the condition $|X_n - X| \lt \epsilon$ is now an event: even for a fixed $n$ and $\epsilon$ it might or might not occur. Considering the probability of it being met gives rise to convergence in probability. For $X_n \overset{p}{\to} X$ we want the complementary probability $P(|X_n - X| \ge \epsilon)$ (intuitively, the probability that $X_n$ differs from $X$ by at least $\epsilon$) to become arbitrarily small for sufficiently large $n$. For a fixed $\epsilon$ this gives rise to a whole sequence of probabilities, $P(|X_1 - X| \ge \epsilon)$, $P(|X_2 - X| \ge \epsilon)$, $P(|X_3 - X| \ge \epsilon)$, $\dots$ and if this sequence of probabilities converges to zero for every $\epsilon \gt 0$, then we say $X_n$ converges in probability to $X$. This happens in our example: $|X_n - X| = \frac{X}{n}$, so $P(|X_n - X| \ge \epsilon) = P(X \ge n\epsilon) = \max(0, 1 - n\epsilon)$, which is exactly zero once $n \ge 1/\epsilon$. Note that probability limits are often constants: for instance, for a consistent estimator in an econometric regression we have $\text{plim}(\hat \beta) = \beta$ as the sample size $n$ increases. But here $\text{plim}(X_n) = X \sim U(0,1)$. Effectively, convergence in probability means that it's unlikely that $X_n$ and $X$ will differ by much on a particular realisation, and I can make the probability of $X_n$ and $X$ being further than $\epsilon$ apart as small as I like, so long as I pick a sufficiently large $n$.
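A quick simulation can check the exact probability against a Monte Carlo estimate. This is a minimal Python sketch using only the standard library; the function names `exact_prob` and `mc_prob` are hypothetical. The key point it encodes is that $X_n$ is computed from the *same* realisation of $X$, since convergence in probability compares the two variables draw by draw.

```python
import random


def exact_prob(n, eps):
    # X_n - X = X / n >= 0, so P(|X_n - X| >= eps) = P(X >= n * eps),
    # and for X ~ U(0, 1) that is 1 - n * eps, floored at zero.
    return max(0.0, 1.0 - n * eps)


def mc_prob(n, eps, draws=100_000, seed=0):
    # Monte Carlo estimate of P(|X_n - X| >= eps). X_n is computed from the
    # SAME realisation of X, which is what convergence in probability compares.
    rng = random.Random(seed)
    hits = 0
    for _ in range(draws):
        x = rng.random()        # one realisation of X ~ U(0, 1)
        x_n = (1 + 1 / n) * x   # the corresponding realisation of X_n
        if abs(x_n - x) >= eps:
            hits += 1
    return hits / draws


eps = 0.1
for n in (1, 2, 5, 10, 20):
    print(n, exact_prob(n, eps), mc_prob(n, eps))
```

The estimates should track $\max(0, 1 - n\epsilon)$ closely and hit zero from $n = 10$ onwards when $\epsilon = 0.1$.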

A different sense in which $X_n$ becomes closer to $X$ is that their distributions look more and more alike. I can measure this by comparing their CDFs. In particular, pick some $x$ at which $F_X(x) = P(X \leq x)$ is continuous (in our example $X \sim U(0,1)$, so its CDF is continuous everywhere and any $x$ will do) and evaluate the CDFs of the sequence of $X_n$s there. This produces another sequence of probabilities, $P(X_1 \leq x)$, $P(X_2 \leq x)$, $P(X_3 \leq x)$, $\dots$ and this sequence converges to $P(X \leq x)$: since $X_n \sim U(0, \frac{n+1}{n})$, for $0 \le x \le 1$ we have $P(X_n \le x) = \frac{n}{n+1}x \to x$, for $x \lt 0$ every term is zero, and for $x \gt 1$ the terms equal $\min\left(1, \frac{n}{n+1}x\right) \to 1$. So the CDFs evaluated at $x$ for each of the $X_n$ become arbitrarily close to the CDF of $X$ evaluated at $x$. If this holds at every $x$ where $F_X$ is continuous, then $X_n$ converges to $X$ in distribution. That is what happens here, and we should not be surprised, since convergence in probability to $X$ implies convergence in distribution to $X$. Note that it can't be the case that $X_n$ converges in probability to a particular non-degenerate distribution but converges in distribution to a constant. (Perhaps this was the point of confusion in the original question? But see the clarification below.)
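The exact CDFs are easy to tabulate. The sketch below (function names are hypothetical, chosen for illustration) evaluates $P(X_n \le x)$ at a few points for increasing $n$ and prints them next to the limit $P(X \le x)$; since $F_X$ is continuous everywhere here, every point is a valid place to check.

```python
def cdf_uniform(x, a, b):
    # CDF of the U(a, b) distribution evaluated at x
    if x <= a:
        return 0.0
    if x >= b:
        return 1.0
    return (x - a) / (b - a)


def cdf_x_n(x, n):
    # X_n = (1 + 1/n) X with X ~ U(0, 1), so X_n ~ U(0, (n + 1) / n)
    return cdf_uniform(x, 0.0, (n + 1) / n)


def cdf_x(x):
    # Limiting CDF: X ~ U(0, 1), continuous everywhere
    return cdf_uniform(x, 0.0, 1.0)


for x in (-0.5, 0.25, 0.5, 0.9, 1.2):
    seq = [round(cdf_x_n(x, n), 4) for n in (1, 10, 100, 1000)]
    print(x, seq, "limit:", cdf_x(x))
```

Each printed row should approach its limit as $n$ grows, which is convergence in distribution checked pointwise on the CDFs.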

For a different example, let $Y_n \sim U(1, \frac{n+1}{n})$. We now have a sequence of RVs, $Y_1 \sim U(1,2)$, $Y_2 \sim U(1,\frac{3}{2})$, $Y_3 \sim U(1,\frac{4}{3})$, $\dots$ and it is clear that the probability distribution is degenerating to a spike at $y=1$. Now consider the degenerate distribution $Y=1$, by which I mean $P(Y=1)=1$. For any $\epsilon \gt 0$, the sequence $P(|Y_n - Y| \ge \epsilon)$ converges to zero: $Y_n - 1 \sim U(0, \frac{1}{n})$, so $P(|Y_n - Y| \ge \epsilon) = \max(0, 1 - n\epsilon)$, which is zero once $n \ge 1/\epsilon$. Hence $Y_n$ converges to $Y$ in probability. As a consequence, $Y_n$ must also converge to $Y$ in distribution, which we can confirm by considering the CDFs. Since the CDF $F_Y(y)$ of $Y$ is discontinuous at $y=1$, we need not consider the CDFs evaluated at that value, but for the CDFs evaluated at any other $y$ we can see that the sequence $P(Y_1 \leq y)$, $P(Y_2 \leq y)$, $P(Y_3 \leq y)$, $\dots$ converges to $P(Y \leq y)$, which is zero for $y \lt 1$ and one for $y \gt 1$. This time, because the sequence of RVs converged in probability to a constant, it converged in distribution to a constant also.
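The same tabulation works for $Y_n$, and it also shows why the discontinuity point is excluded. In this minimal sketch (hypothetical helper names), $P(Y_n \le 1)$ is zero for every $n$ while $P(Y \le 1) = 1$, so insisting on agreement at $y = 1$ would wrongly rule out a sequence that plainly collapses onto the constant $1$.

```python
def cdf_y_n(y, n):
    # Y_n ~ U(1, (n + 1) / n)
    lo, hi = 1.0, (n + 1) / n
    if y <= lo:
        return 0.0
    if y >= hi:
        return 1.0
    return (y - lo) / (hi - lo)


def cdf_y(y):
    # Degenerate limit: P(Y = 1) = 1, so the CDF jumps from 0 to 1 at y = 1
    return 1.0 if y >= 1 else 0.0


def prob_far(n, eps):
    # Y_n - 1 ~ U(0, 1/n), so P(|Y_n - 1| >= eps) = max(0, 1 - n * eps)
    return max(0.0, 1.0 - n * eps)


for y in (0.9, 1.0, 1.05, 1.5):
    seq = [round(cdf_y_n(y, n), 4) for n in (1, 10, 100, 1000)]
    print(y, seq, "limit:", cdf_y(y))
```

The rows for $y = 0.9$, $1.05$ and $1.5$ converge to their limits; the row for $y = 1.0$ stays at zero against a limit of one.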

Some final clarifications:

- Although convergence in probability implies convergence in distribution, the converse is false in general. Just because two variables have the same distribution doesn't mean they are likely to be close to each other. For a trivial example, take $X\sim\text{Bernoulli}(0.5)$ and $Y=1-X$. Then $X$ and $Y$ both have exactly the same distribution (a 50% chance each of being zero or one), and the sequence $X_n=X$, i.e. the sequence $X,X,X,X,\dots$, trivially converges in distribution to $Y$ (the CDF at any position in the sequence is the same as the CDF of $Y$). But $Y$ and $X$ are always one apart, so $P(|X_n - Y| \ge 0.5)=1$ for every $n$, which does not tend to zero, so $X_n$ does not converge to $Y$ in probability. However, if there is convergence in distribution to a constant, then that implies convergence in probability to that constant (intuitively, further along the sequence it becomes unlikely to be far from that constant).

- As my examples make clear, convergence in probability can be to a constant but doesn't have to be; convergence in distribution might also be to a constant. It isn't possible to converge in probability to a constant but converge in distribution to a particular non-degenerate distribution, or vice versa.

- Is it possible you've seen an example where, for instance, you were told a sequence $X_n$ converged to another sequence $Y_n$? You may not have realised it was a sequence, but the give-away would be if it was a distribution that also depended on $n$. It might be that both sequences converge to a constant (i.e. a degenerate distribution). Your question suggests you're wondering how a particular sequence of RVs could converge both to a constant and to a distribution; I wonder if this is the scenario you're describing.

- My current explanation is not very "intuitive" - I was intending to make the intuition graphical, but haven't had time to add the graphs for the RVs yet.
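The Bernoulli counterexample from the first clarification can also be checked by simulation. In this minimal Python sketch (the helper `simulate_pair` is hypothetical), $X \sim \text{Bernoulli}(0.5)$ and $Y = 1 - X$ share the same marginal frequencies, yet every realisation has $|X - Y| = 1$. Because $X_n = X$ for every $n$, the estimate of $P(|X_n - Y| \ge 0.5)$ is the same at every position in the sequence.

```python
import random


def simulate_pair(draws=100_000, seed=1):
    # Draw realisations of X ~ Bernoulli(0.5) and set Y = 1 - X on each one
    rng = random.Random(seed)
    xs = [1 if rng.random() < 0.5 else 0 for _ in range(draws)]
    ys = [1 - x for x in xs]
    return xs, ys


xs, ys = simulate_pair()
n = len(xs)
print("P(X = 1) ~", sum(xs) / n)  # close to 0.5
print("P(Y = 1) ~", sum(ys) / n)  # also close to 0.5: same distribution
# X_n = X for every n, so this probability does not change along the sequence
print("P(|X_n - Y| >= 0.5) ~", sum(abs(x - y) >= 0.5 for x, y in zip(xs, ys)) / n)  # 1.0
```

Matching marginal frequencies is all convergence in distribution looks at; convergence in probability also needs the realisations to be close, which never happens here.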