(with apologies to this question).

Consider two distributions $F$ and $G$, both unimodal, absolutely continuous and square integrable, satisfying:

$$F<_c G$$

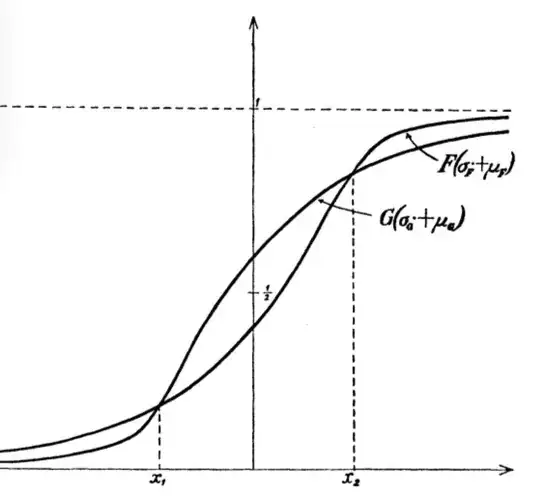

this means that the standardized distributions $F(x\sigma_F+\mu_F)$ and $G(x\sigma_G+\mu_G)$ cross each other exactly twice and that in the middle section we have that $G(x\sigma_G+\mu_G)>F(x\sigma_F+\mu_F)$, as in the example below (from [0]):
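
To make the two-crossing condition concrete, here is a small numerical sketch. It assumes, purely for illustration (neither choice comes from the question or from [0]), that $F$ is the standard normal and $G$ is an exponential distribution with rate 1. Each distribution is standardized with its own mean and standard deviation, and the hypothetical helper `crossings` scans a grid for sign changes of $G(x\sigma_G+\mu_G)-F(x\sigma_F+\mu_F)$. A grid scan can miss crossings closer together than the grid spacing, so this only illustrates the definition; it is not a test of $F<_c G$.

```python
import math


def normal_cdf(x):
    """Standard normal CDF; already standardized (mean 0, sd 1)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))


def exp_cdf_standardized(x, rate=1.0):
    """Exponential(rate) CDF evaluated at x*sigma + mu, where mu = sigma = 1/rate."""
    mu = sigma = 1.0 / rate
    y = x * sigma + mu
    return 1.0 - math.exp(-rate * y) if y > 0 else 0.0


def crossings(cdf_f, cdf_g, lo=-4.0, hi=8.0, n=12001):
    """Approximate points where cdf_g - cdf_f changes sign on a uniform grid.

    Grid points where the difference is exactly zero are skipped; each
    crossing is reported as the midpoint of its bracketing interval.
    """
    points = []
    prev_sign, prev_x = 0, None
    for i in range(n):
        x = lo + (hi - lo) * i / (n - 1)
        d = cdf_g(x) - cdf_f(x)
        sign = (d > 0) - (d < 0)
        if sign == 0:
            continue
        if prev_sign != 0 and sign != prev_sign:
            points.append((prev_x + x) / 2.0)
        prev_sign, prev_x = sign, x
    return points


if __name__ == "__main__":
    pts = crossings(normal_cdf, exp_cdf_standardized)
    print(len(pts), [round(p, 2) for p in pts])
```

For this pair the scan should report two crossings, one in $(-1, 0)$ and one in $(1, 2)$, with the standardized exponential CDF above the standardized normal CDF in between (e.g. at $x=0$: $1-e^{-1}\approx 0.632 > 0.5$).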

In practice, $F<_c G$ is a convenient way to say that $G$ is more right skewed than $F$.

Now, can we say that

$$F<_c G\implies \sigma_G^2\geq \sigma_F^2 \; ?$$

I couldn't find a proof of this.

From Wikipedia:

If the random variable $X$ is continuous with probability density function $f(x)$, then the variance is given by

$$\sigma^2_F = \int (x-\mu_F)^2 \, f(x) \, dx = \int x^2 \, f(x) \, dx - \mu_F^2$$

where $\mu_F$ is the expected value, $$\mu_F = \int x \, f(x) \, dx\, $$
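
As a quick numerical check that the two expressions for the variance agree, the sketch below approximates each integral with the midpoint rule. The density (an exponential with rate 2, whose mean is $1/2$ and variance $1/4$), the truncation interval $[0, 40]$ and the helper names are illustrative assumptions; any square-integrable density with negligible mass outside the chosen interval would do.

```python
import math


def moments(pdf, lo, hi, n=100_000):
    """Return (mean, centered variance, raw-moment variance) of a density,
    approximating each integral with the midpoint rule on [lo, hi]."""
    h = (hi - lo) / n
    xs = [lo + (i + 0.5) * h for i in range(n)]
    ws = [pdf(x) * h for x in xs]  # f(x) dx at each midpoint
    mu = sum(x * w for x, w in zip(xs, ws))  # integral of x f(x) dx
    # integral of (x - mu)^2 f(x) dx
    var_centered = sum((x - mu) ** 2 * w for x, w in zip(xs, ws))
    # integral of x^2 f(x) dx, minus mu^2
    var_raw = sum(x * x * w for x, w in zip(xs, ws)) - mu ** 2
    return mu, var_centered, var_raw


def exp_pdf(x, rate=2.0):
    """Exponential density (illustrative choice): mean 1/rate, variance 1/rate**2."""
    return rate * math.exp(-rate * x) if x >= 0 else 0.0


if __name__ == "__main__":
    print(moments(exp_pdf, 0.0, 40.0))
```

Both variance estimates should be close to $0.25$ and to each other; the centered form is usually preferred numerically because the raw-moment form subtracts two nearly equal quantities when $\mu_F$ is large relative to $\sigma_F$.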

- [0] Hannu Oja (1981). On Location, Scale, Skewness and Kurtosis of Univariate Distributions. *Scandinavian Journal of Statistics*, Vol. 8, No. 3, pp. 154-168.