From what I have read:

A distant supervision algorithm usually has the following steps:

1] It may have some labeled training data.

2] It has access to a pool of unlabeled data.

3] It has an operator that lets it sample from this unlabeled data and label the samples; this operator is expected to produce noisy labels.

4] The algorithm then uses the original labeled training data (if it had any) together with the new noisily labeled data to produce the final output.
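To make my reading of these four steps concrete, here is a minimal sketch. Everything in it is an assumption for illustration, not taken from any of the papers: the function names, the keyword-based labeling operator, and the toy word-vote "classifier" are all hypothetical.

```python
from collections import Counter
from typing import Callable, List, Optional, Tuple

Example = Tuple[str, str]  # (text, label)
Classifier = Callable[[str], str]


def distant_supervision(
    labeled: List[Example],
    unlabeled: List[str],
    noisy_label: Callable[[str], Optional[str]],
    train: Callable[[List[Example]], Classifier],
) -> Classifier:
    # Step 3: apply the noisy operator to the pool; None means "abstain".
    noisy: List[Example] = []
    for text in unlabeled:
        label = noisy_label(text)
        if label is not None:
            noisy.append((text, label))
    # Step 4: train on the gold data (possibly empty) plus the noisy data.
    return train(labeled + noisy)


def keyword_labeler(text: str) -> Optional[str]:
    # Hypothetical noisy operator: a crude keyword heuristic.
    if "great" in text:
        return "pos"
    if "awful" in text:
        return "neg"
    return None


def train_word_votes(data: List[Example]) -> Classifier:
    # Toy learner: each word votes for the labels it was seen with.
    votes = {}
    for text, label in data:
        for word in text.lower().split():
            votes.setdefault(word, Counter())[label] += 1
    default = Counter(label for _, label in data).most_common(1)[0][0]

    def classify(text: str) -> str:
        total = Counter()
        for word in text.lower().split():
            total.update(votes.get(word, {}))
        return total.most_common(1)[0][0] if total else default

    return classify


gold = [("I loved it", "pos")]                          # step 1
pool = ["great movie", "awful plot", "it was fine"]     # step 2
clf = distant_supervision(gold, pool, keyword_labeler, train_word_votes)
```

Note that the operator abstains on "it was fine", so only two of the three pool items end up in the training set.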

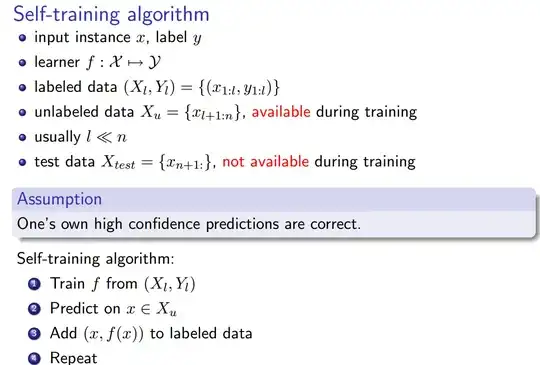

Self-learning (Yates, Alexander, et al. "Textrunner: open information extraction on the web." Proceedings of Human Language Technologies: The Annual Conference of the North American Chapter of the Association for Computational Linguistics: Demonstrations. Association for Computational Linguistics, 2007.):

The Learner operates in two steps. First, it automatically labels its own training data as positive or negative. Second, it uses this labeled data to train a Naive Bayes classifier.

Weak Supervision (Hoffmann, Raphael, et al. "Knowledge-based weak supervision for information extraction of overlapping relations." Proceedings of the 49th Annual Meeting of the Association for Computational Linguistics: Human Language Technologies-Volume 1. Association for Computational Linguistics, 2011.):

A more promising approach, often called “weak” or “distant” supervision, creates its own training data by heuristically matching the contents of a database to corresponding text.
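As I understand this description, the labeling heuristic can be as simple as string matching against a knowledge base. A rough sketch under that assumption follows; the toy `KB`, the relation names, and the `label_by_kb` helper are all hypothetical and not taken from the paper.

```python
from typing import Dict, List, Tuple

# Hypothetical toy knowledge base: (entity1, entity2) -> relation.
KB: Dict[Tuple[str, str], str] = {
    ("Barack Obama", "Honolulu"): "born_in",
    ("Steve Jobs", "Apple"): "founded",
}


def label_by_kb(sentences: List[str]) -> List[Tuple[str, Tuple[str, str], str]]:
    """Label a sentence with a relation whenever both entities of a KB fact occur in it."""
    labeled = []
    for sentence in sentences:
        for (e1, e2), relation in KB.items():
            if e1 in sentence and e2 in sentence:
                labeled.append((sentence, (e1, e2), relation))
    return labeled


corpus = [
    "Barack Obama was born in Honolulu.",
    "Barack Obama visited Honolulu last week.",  # matches the KB, but the label is wrong
    "Steve Jobs ate an apple.",                  # no match: "Apple" is case-sensitive here
]
training_data = label_by_kb(corpus)
```

The second sentence gets labeled `born_in` even though it does not express that relation, which is the kind of label noise I take step 3 above to be referring to.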

It all sounds the same to me, except that self-training seems slightly different: the labeling heuristic is the trained classifier itself, and there is a loop between the labeling phase and the classifier training phase. However, Yao, Limin, Sebastian Riedel, and Andrew McCallum ("Collective cross-document relation extraction without labelled data." Proceedings of the 2010 Conference on Empirical Methods in Natural Language Processing. Association for Computational Linguistics, 2010) claim that distant supervision == self training == weak supervision.
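The loop I have in mind for self-training looks roughly like the sketch below. It is only my own illustration: the 1-D nearest-mean classifier, the confidence measure, and the `min_conf` threshold are assumptions chosen to keep the example short, and it assumes the seed set contains both classes.

```python
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, int]  # (value, label in {0, 1})


def fit(data: List[Point]) -> Tuple[float, float]:
    """Toy classifier: store the mean of each class."""
    m0 = mean(x for x, y in data if y == 0)
    m1 = mean(x for x, y in data if y == 1)
    return m0, m1


def predict(model: Tuple[float, float], x: float) -> Tuple[int, float]:
    """Return (label, confidence); confidence is the gap between the two distances."""
    m0, m1 = model
    d0, d1 = abs(x - m0), abs(x - m1)
    return (0 if d0 <= d1 else 1), abs(d0 - d1)


def self_train(labeled: List[Point], unlabeled: List[float],
               min_conf: float = 1.0, max_rounds: int = 10):
    labeled, pool = list(labeled), list(unlabeled)
    for _ in range(max_rounds):
        model = fit(labeled)                      # training phase
        newly = []
        for x in pool:                            # labeling phase
            label, conf = predict(model, x)
            if conf >= min_conf:                  # keep only confident labels
                newly.append((x, label))
        if not newly:
            break
        labeled += newly                          # feed the labels back in
        taken = {x for x, _ in newly}
        pool = [x for x in pool if x not in taken]
    return fit(labeled), labeled


seed = [(0.0, 0), (10.0, 1)]
model, final_labeled = self_train(seed, [1.0, 2.0, 8.0, 9.0, 5.2])
```

Here the labeling operator is the classifier itself and is retrained every round, whereas in the knowledge-base case the heuristic is fixed and external to the learner.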

Also, are there other synonyms?