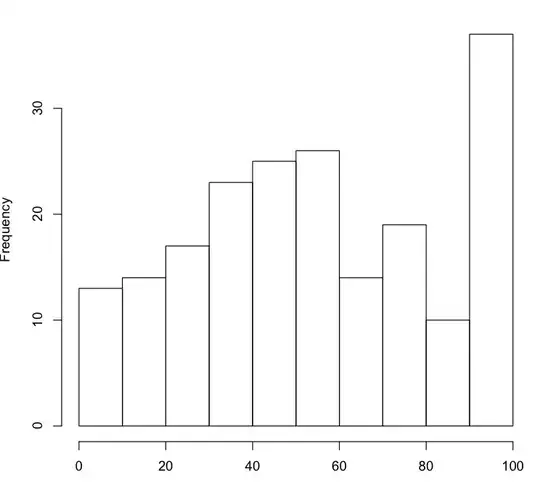

First, a comment: "robust" usually refers to approaches that guard against outliers and violations of distributional assumptions. In your case, the problem is clearly a violation of a distributional assumption, but the right fix seems to depend on your DV (sorry for the pun).

Which method to use depends on whether 100 is "truly" the highest possible value of your DV, or whether your DV measures an unobserved (latent) variable whose distribution extends beyond 100, possibly without bound.

To illustrate the "latent variable" concept: on a cognitive test, you want to measure "intelligence", but you only observe whether someone solves each question. So if some people solve all the questions, you do not know whether they all have the same intelligence or whether their intelligence still varies.
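A tiny sketch of this ceiling effect, assuming a hypothetical test whose maximum score is 100 and made-up latent scores (`observe` and `CEILING` are illustrative names, not from any library). Everyone whose latent score exceeds the ceiling receives the same observed score, so the spread among them disappears:

```python
CEILING = 100  # hypothetical maximum observable score


def observe(latent_scores, ceiling=CEILING):
    """Observed score = latent score, capped at the test's ceiling."""
    return [min(s, ceiling) for s in latent_scores]


# Hypothetical latent "intelligence" scores; the last three exceed the ceiling.
latent = [85, 97, 104, 120, 150]
observed = observe(latent)
print(observed)  # [85, 97, 100, 100, 100]

# Among the people at the ceiling, the latent spread is lost entirely.
top_latent = [s for s in latent if s >= CEILING]
print(max(top_latent) - min(top_latent))  # 46
top_observed = observe(top_latent)
print(max(top_observed) - min(top_observed))  # 0
```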

If your DV is of the second kind, you could use tobit regression, which treats a score of 100 as censored: the latent value is only known to be at least 100.
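A minimal sketch of the likelihood a tobit model maximizes, assuming the DV is right-censored at 100 and the linear predictor contains two predictors and their interaction. The helpers (`design_row`, `tobit_loglik`) are hypothetical and written in plain Python for illustration only; in practice you would hand this likelihood to an optimizer or use an existing implementation such as `AER::tobit()` in R with `right = 100`.

```python
import math


def norm_logpdf(z):
    """Log density of the standard normal distribution at z."""
    return -0.5 * z * z - 0.5 * math.log(2.0 * math.pi)


def norm_logsf(z):
    """log P(Z > z) for standard normal Z; erfc keeps the upper tail accurate."""
    return math.log(0.5 * math.erfc(z / math.sqrt(2.0)))


def design_row(a, b):
    """Hypothetical design row: intercept, two predictors and their interaction."""
    return [1.0, a, b, a * b]


def tobit_loglik(beta, sigma, X, y, upper=100.0):
    """Log-likelihood of a tobit model right-censored at `upper`.

    Latent model: y* = x . beta + e with e ~ N(0, sigma^2).
    Observed:     y  = min(y*, upper).
    """
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    total = 0.0
    for xi, yi in zip(X, y):
        mu = sum(b * x for b, x in zip(beta, xi))  # linear predictor
        if yi >= upper:
            # At the ceiling we only know y* >= upper: use the upper-tail probability.
            total += norm_logsf((upper - mu) / sigma)
        else:
            # Below the ceiling: ordinary normal density of the observed score.
            total += norm_logpdf((yi - mu) / sigma) - math.log(sigma)
    return total


# Usage: a hypothetical 2x2 design where the (1, 1) cell hits the ceiling.
X = [design_row(a, b) for a, b in [(0, 0), (1, 0), (0, 1), (1, 1)]]
y = [60.0, 75.0, 80.0, 100.0]
print(tobit_loglik([60.0, 15.0, 20.0, 10.0], 10.0, X, y))
```

The censored branch is the point: OLS would treat an observed 100 as exact, whereas the tobit likelihood only uses the probability that the latent score is at least 100. A predicted latent mean of 105 in the (1, 1) cell is therefore not penalized as a misfit, which is exactly the situation where a ceiling can mask an interaction.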

It's more difficult if your DV is really of the first kind, that is, if 100 is truly the highest score that could ever be measured.

And BTW, even with the "right" kind of approach you might still end up with a non-significant interaction.