Background: unbiased estimators of products of population moments

If you desire an UNBIASED estimator of a (product of moments), there are 3 varieties:

Polykays (a generalisation of k-statistics): these are unbiased estimators of products of population cumulants. The term polykay was coined by Tukey, but the concept goes back to Dressel (1940).

Polyaches (a generalisation of h-statistics): these are unbiased estimators of products of population central moments. i.e.

$$E\left[\text{h}_{\{r,t,\ldots ,v\}}\right] = {\mu }_r {\mu }_t \cdots {\mu }_v\text{$\, $}$$ ...... where ${\mu }_r$ denotes the $r^{th}$ central moment of the population.

- Polyraws: these are unbiased estimators of products of population raw moments. That is, you wish to find the $polyraw_{r, t, ...v}$ such that:

$$E\left[\text{polyraw}_{\{r,t,\ldots ,v\}}\right] = \acute{\mu }_r \acute{\mu }_t \cdots \acute{\mu }_v\text{$\, $}$$

...... where $\acute{\mu }_r$ denotes the $r^{th}$ raw moment of the population.

The Problem

We are given a random sample $(X_1, X_2, \dots, X_n)$ drawn on parent random variable $X$.

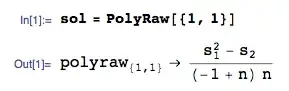

If we desire an unbiased estimator of: $(E[X])^2 = \acute{\mu }_1 \acute{\mu }_1$, then an unbiased estimator is the {1,1} polyraw:

where $s_r = \sum_{i=1}^n X_i^r$ denotes the $r^{th}$ power sum.

Comparison

Benjamin proposed the estimator: $\bar{X}^2 = (\frac{s_1}{n})^2$. This is not an unbiased estimator, since $E[(\frac{s_1}{n})^2]$ is just the $1^{st}$ RawMoment of $(\frac{s_1}{n})^2$:

which is not equal to $\acute{\mu }_1^2$.

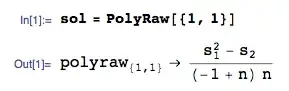

Let us check the polyraw solution:

... which is an unbiased estimator.

Plainly, unbiasedness is not everything, and we could equally calculate, for example, the MSE (mean-squared error) of each estimator using exactly the same tools.

[Update: Just had a quick play with this: in a simple test case of $X \sim N(0,\sigma^2)$, the polyraw unbiased estimator has smaller MSE than Ben's ML estimator, for all sample sizes $n$. That is, at least for the test case of Normality, the polyraw unbiased estimator dominates the maximum likelihood estimator, at all sample sizes. ]

Notes

PolyRaw, RawMomentToRaw etc are functions in the mathStatica package for Mathematica

I confess to the neologism polyache in our Springer book (2002) (and more recently, to polyraw in the latest edition).