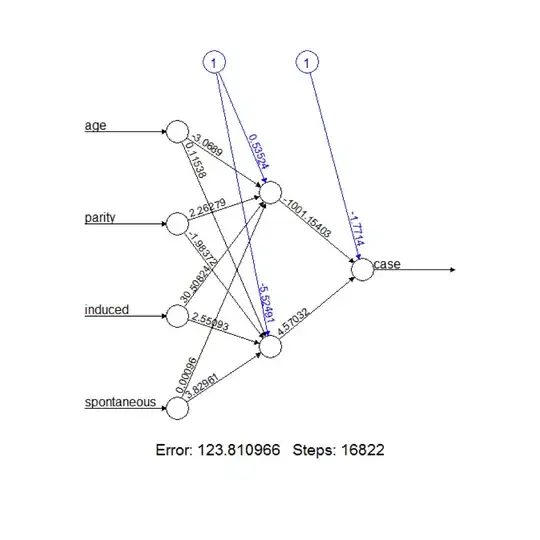

Neural networks are often treated as "black boxes" because of their complex structure. This is not ideal, since it is often useful to have an intuitive grasp of how a model works internally. What methods exist for visualizing how a trained neural network works? Alternatively, how can we extract easily digestible descriptions of the network (e.g., "this hidden node is primarily working with these inputs")?
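To make the kind of "digestible description" meant here concrete, below is a minimal sketch, not a proposed solution. It assumes the first-layer weights are available as a NumPy array of shape `(n_hidden, n_inputs)` and that the inputs are on comparable scales, so that weight magnitude is a rough proxy for influence. The function name `top_inputs_per_hidden_node`, the weight values, and the input names are all hypothetical and chosen only for illustration.

```python
import numpy as np

def top_inputs_per_hidden_node(W, input_names, k=2):
    """For each hidden node (row of W), list the k inputs with the
    largest absolute incoming weight, together with the signed weight."""
    summary = []
    for row in W:
        # Indices of the k largest |weights|, strongest first.
        order = np.argsort(-np.abs(row))[:k]
        summary.append([(input_names[i], float(row[i])) for i in order])
    return summary

# Hypothetical first-layer weights: 2 hidden nodes, 4 inputs.
W = np.array([[0.90, -0.10, 0.05, 0.00],
              [0.02, -1.20, 0.80, 0.10]])
names = ["age", "height", "weight", "income"]

summary = top_inputs_per_hidden_node(W, names, k=2)
for j, tops in enumerate(summary):
    described = ", ".join(f"{name} ({w:+.2f})" for name, w in tops)
    print(f"hidden node {j}: {described}")
```

A summary like this only reflects raw weight magnitudes in the first layer; it says nothing about how the hidden nodes are combined downstream, which is part of what the question is asking about.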

I am primarily interested in two-layer feed-forward networks, but would also like to hear about solutions for deeper networks. The input data can be either visual or non-visual in nature.