After some searching, I find very little on the incorporation of observation weights/measurement errors into principal components analysis. What I do find tends to rely on iterative approaches to include weightings (e.g., here). My question is why is this approach necessary? Why can't we use the eigenvectors of the weighted covariance matrix?

-

2In addition to the answer(s) below, please see thread http://stats.stackexchange.com/q/141754/3277, where weighted PCA (with weights on columns and/or rows) is explained as primarily equivalent to weighted (generalized) svd/biplot. – ttnphns Oct 16 '16 at 09:35

2 Answers

It depends on what exactly your weights apply to.

Row weights

Let $\mathbf{X}$ be the data matrix with variables in columns and $n$ observations $\mathbf x_i$ in rows. If each observation has an associated weight $w_i$, then it is indeed straightforward to incorporate these weights into PCA.

First, one needs to compute the weighted mean $\boldsymbol \mu = \frac{1}{\sum w_i}\sum w_i \mathbf x_i$ and subtract it from the data in order to center it.

Then we compute the weighted covariance matrix $\frac{1}{\sum w_i}\mathbf X^\top \mathbf W \mathbf X$, where $\mathbf W = \operatorname{diag}(w_i)$ is the diagonal matrix of weights, and apply standard PCA to analyze it.

Cell weights

The paper by Tamuz et al., 2013, that you found, considers a more complicated case when different weights $w_{ij}$ are applied to each element of the data matrix. Then indeed there is no analytical solution and one has to use an iterative method. Note that, as acknowledged by the authors, they reinvented the wheel, as such general weights have certainly been considered before, e.g. in Gabriel and Zamir, 1979, Lower Rank Approximation of Matrices by Least Squares With Any Choice of Weights. This was also discussed here.

As an additional remark: if the weights $w_{ij}$ vary with both variables and observations, but are symmetric, so that $w_{ij}=w_{ji}$, then analytic solution is possible again, see Koren and Carmel, 2004, Robust Linear Dimensionality Reduction.

-

Thank you for the clarification. Can you explain why no analytic solution is possible with off-diagonal weights? I this is what I am missing from both Tamuz et al 2013 and Gabriel and Zamir 1979. – noname Aug 27 '14 at 21:00

-

@noname: I am not aware of such a proof, and moreover I would not be surprised if it were not known. It is generally quite tricky to prove that something is *not possible*, in particular that something is not possible analytically. The impossibility of angle trisection famously waited for its proof for over 2000 years... (cont.) – amoeba Aug 27 '14 at 21:44

-

3@noname: (cont.) What you are asking is to show that the problem of minimizing $\sum_{i,j} w_{ij}(X_{ij} - A_{ij})^2$ with respect to $A$ constrained to have low rank $q$. is not reducible to an eigenvector problem. I am afraid you would need another forum for that (maybe mathoverflow?). But note that finding eigenvectors is also not exactly an *analytical* solution: it's just that the [iterations](http://en.wikipedia.org/wiki/Power_iteration) are usually silently performed by a standard library function. – amoeba Aug 27 '14 at 21:44

-

2+1. The first section of the answer can be conceptualized also in terms of Weighted (Generalized) Biplot as described [here](http://stats.stackexchange.com/a/141755/3277). Keeping in mind how PCA is a "specific case of" Biplot (also concerned in the lined answer). – ttnphns Oct 06 '15 at 10:07

-

@ttnphns: After your comment and another thread being closed as a duplicate, I re-read my answer and expanded the explanation of how to deal with row weights. I think previously it was not completely correct or at least was not complete because I did not mention the centering with a weighted mean. I hope it makes more sense now! – amoeba Oct 06 '15 at 20:44

-

can I ask, why is X⊤WX divided by (n−1) instead of the sum of weights? In row method – Ján Яabčan Feb 06 '17 at 18:02

-

If X is a mxn matrix, using the formula above, the final weighted covariance matrix is nxn - is this correct? [(nxm)(mxm)(mxn)]. Shouldn't a final weighted covariance matrix be mxn and then run a normal PCA? – Zeus May 17 '18 at 05:35

-

@Zeus For a $n\times p$ data matrix, covariance matrix is always $p\times p$, weighted or not weighted. When I wrote "apply standard PCA" I meant "do the eigenvector decomposition etc." – amoeba May 17 '18 at 10:35

-

Thank you for the clarification. I am confused when reading this paper https://www.researchgate.net/publication/220869008_Point_cloud_denoising_using_robust_principal_component_analysis where they are square rooting the weights in the covariance matrix (section 4). Why the use of square roots of the weights? – wcochran Sep 27 '19 at 19:24

Thank you very much amoeba for the insight regarding row weights. I know that this is not stackoverflow, but I had some difficulties to find an implementation of row-weighted PCA with explanation and, since this is one of the first results when googling for weighted PCA, I thought it would be good to attach my solution, maybe it can help others in the same situation. In this Python2 code snippet, a PCA weighted with an RBF kernel as the one described above is used to calculate the tangents of a 2D dataset. I will be very happy to hear some feedback!

def weighted_pca_regression(x_vec, y_vec, weights):

"""

Given three real-valued vectors of same length, corresponding to the coordinates

and weight of a 2-dimensional dataset, this function outputs the angle in radians

of the line that aligns with the (weighted) average and main linear component of

the data. For that, first a weighted mean and covariance matrix are computed.

Then u,e,v=svd(cov) is performed, and u * f(x)=0 is solved.

"""

input_mat = np.stack([x_vec, y_vec])

weights_sum = weights.sum()

# Subtract (weighted) mean and compute (weighted) covariance matrix:

mean_x, mean_y = weights.dot(x_vec)/weights_sum, weights.dot(y_vec)/weights_sum

centered_x, centered_y = x_vec-mean_x, y_vec-mean_y

matrix_centered = np.stack([centered_x, centered_y])

weighted_cov = matrix_centered.dot(np.diag(weights).dot(matrix_centered.T)) / weights_sum

# We know that v rotates the data's main component onto the y=0 axis, and

# that u rotates it back. Solving u.dot([x,0])=[x*u[0,0], x*u[1,0]] gives

# f(x)=(u[1,0]/u[0,0])x as the reconstructed function.

u,e,v = np.linalg.svd(weighted_cov)

return np.arctan2(u[1,0], u[0,0]) # arctan more stable than dividing

# USAGE EXAMPLE:

# Define the kernel and make an ellipse to perform regression on:

rbf = lambda vec, stddev: np.exp(-0.5*np.power(vec/stddev, 2))

x_span = np.linspace(0, 2*np.pi, 31)+0.1

data_x = np.cos(x_span)[:-1]*20-1000

data_y = np.sin(x_span)[:-1]*10+5000

data_xy = np.stack([data_x, data_y])

stddev = 1 # a stddev of 1 in this context is highly local

for center in data_xy.T:

# weight the points based on their euclidean distance to the current center

euclidean_distances = np.linalg.norm(data_xy.T-center, axis=1)

weights = rbf(euclidean_distances, stddev)

# get the angle for the regression in radians

p_grad = weighted_pca_regression(data_x, data_y, weights)

# plot for illustration purposes

line_x = np.linspace(-5,5,10)

line_y = np.tan(p_grad)*line_x

plt.plot(line_x+center[0], line_y+center[1], c="r")

plt.scatter(*data_xy)

plt.show()

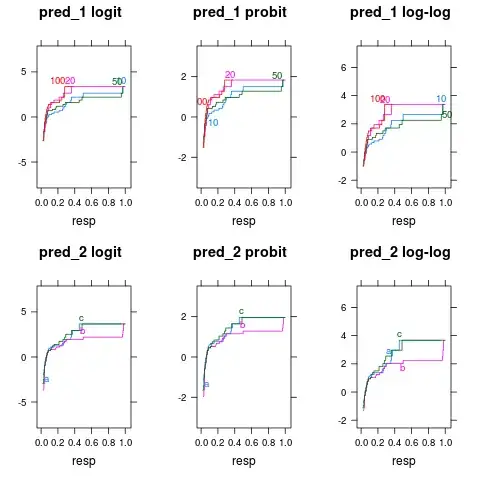

And a sample output (it does the same for every dot):

Cheers,

Andres

- 258

- 2

- 7

-

1Now, since some time has past: in a modern Python 3 setup one would probably use `@` instead of `.dot()`. Also, you should make this general for any kind of input matrix - in terms of number of features. Also, your function should not be called "regression" - as it is no regression. A regression's cost function relates to output error, while a PCA's cost function relates to error orthogonal to input. (This was not a rigorous discription. ;-)) So a PCA is basically a (linear) manifold learner instead of a regression. – Make42 Jan 23 '21 at 10:49

-

Thanks @Make42 for the feedback! Indeed Python 2 is now very outdated, and a PCA is not a regression (which in this example fails to fit the tangents close to the "vertical" asymptote). Hopefully there are modern libraries by now that take proper care of this, do you know any? otherwise feel free to edit (I can give it a go in a few weeks) – fr_andres Jan 23 '21 at 11:17

-

No, I am not aware of modern libraries for this which are standard (like scikit-learn). In fact I did not find any. I will just write my own code now :-/. I checked out how the PCA of scikit-learn is implemented. They are using `svd`, which in turn most likely used `eigh` of numpy or scipy. So, I will go with using `eigh`. I also need the inverse transformation. – Make42 Jan 23 '21 at 11:43

-

@Make42 I just did a quick search, what about this? https://scikit-learn.org/stable/modules/generated/sklearn.decomposition.KernelPCA.html it seems that gamma are the weights and rbf is there – fr_andres Jan 23 '21 at 12:13

-

1Regarding the inverse, naybe the fact that eigh yields u=v, orthogonal, helps (inv=transposed) – fr_andres Jan 23 '21 at 12:19

-

Not sure how does KernelPCA helps. I see that KernelPCA is sort of a linear PCA after a transformation into a higher dimensional space where the values of after the transformation are weighted by distance of the data points in the original space... but that is a very special case and there is weighting only implicitly somewhere in there. I have concrete weights per row and I only want a linear PCA with those weights in the original space. – Make42 Jan 23 '21 at 12:33

-

@Make42 I see. So maybe linear kernel and your weights as gamma works then? – fr_andres Jan 23 '21 at 12:48

-

Nvm, gamma is a scalar. Bummer! Then this implementation doesn't weight the rows, sorry for the noise – fr_andres Jan 23 '21 at 12:50

-

gamma is the "bandwidth" - or degree of smoothing - of the kernel. It is basically the weighting for the "distance", but then the individual weights (of the rows) are based on the distances (weighted by gamma - sort of). – Make42 Jan 23 '21 at 13:26

-

Can you detail what you meant with the "np.arctan2(u[1,0], u[0,0]) # arctan more stable than dividing"? How can I get `p_grad` if I only have the eigenvectors (and eigenvalues) from scipy's `eigh`? I did that because I do not know how to use `u,e,v` for transforming new data to be honest. – Make42 Jan 23 '21 at 13:29

-

I am also confused by the fact that you use the SVD on the (weighted) covariance matrix. I thought one would use the SVD on the original data. That why I calculated the eigenvectors of the covariance matrix instead. But I noticed that your u and v are very similar to my eigenvalue matrix: `u = v = array([[-0.17776152, 0.9840736 ], [ 0.9840736 , 0.17776152]])` while `eigenvectors = array([[-0.9840736 , -0.17776152], [-0.17776152, 0.9840736 ]])`. Not sure what is going on. – Make42 Jan 23 '21 at 13:38

-

1My f(x) in the general case is basically the strongest eigenvector (first col of u). The arctan is just a robust division to get the gradient. In the general case, the gradient is a vector itself – fr_andres Jan 23 '21 at 13:44

-

1Actually... maybe https://github.com/jakevdp/wpca is a valid alternative! – Make42 Jan 23 '21 at 15:03