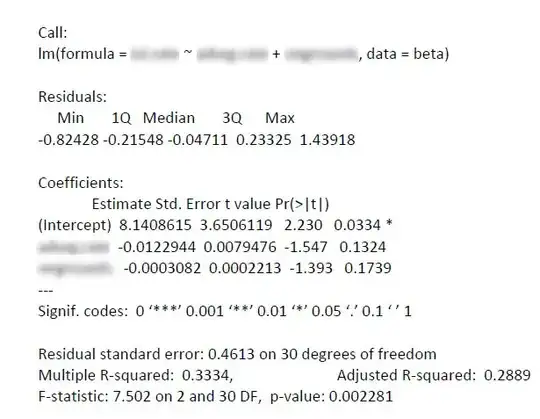

I know this question has been asked in a slightly different form here, but my question differs, and because of the forum rules I can't post on that thread. I have 2 independent variables, n = 32, and a highly significant multiple regression, but only the intercept/constant has a significant p-value.

Also, I've tested for multicollinearity. It's not shown in the R readout, but the variance inflation factor for the 2 independent variables is 2.02. The Durbin-Watson statistic, added-variable plot, P-P plot, standardized residuals plot, and Cook's distance all look normal. The R readout is attached; please let me know what you think.
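For reference, here is what that VIF implies about the two predictors. This is only a sketch of the arithmetic, assuming the usual definition of the VIF; with exactly two predictors, the $R_j^2$ from regressing one predictor on the other is just their squared correlation $r_{12}^2$:

$$
\mathrm{VIF}_j = \frac{1}{1 - R_j^2}
\quad\Longrightarrow\quad
r_{12}^2 = 1 - \frac{1}{2.02} \approx 0.505,
\qquad |r_{12}| \approx 0.71 .
$$

So the predictors appear to share roughly half of their variance, even though a VIF of about 2 is normally not flagged as a problem.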

So: this topic is relevantly different because what I'm searching for, and still haven't found, is an actual explanation of what it means to have a significant regression with no significant predictors. How can this finding, if accurate, be explained? @Glen_b, I have seen that part of Jeromy's post (see my 2nd comment above), but how would I explain that in prose? Would it be accurate to say that the variables, together, have an effect on the outcome, but that their effects cannot be isolated from each other?
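To make the pattern I'm asking about concrete, here is a minimal sketch using the textbook formulas for a two-predictor regression on standardized variables. Only n = 32 and the VIF of 2.02 come from my analysis; the predictor-outcome correlations of 0.5 are hypothetical numbers chosen for illustration, not my data, and the critical values are hard-coded approximations from standard tables.

```python
import math

# From the question: sample size, number of predictors, reported VIF.
n, k = 32, 2
vif = 2.02

# With two predictors, VIF = 1 / (1 - r12^2), so r12 follows from the VIF.
r12 = math.sqrt(1 - 1 / vif)

# HYPOTHETICAL correlations of each predictor with the outcome (not real data).
ry1 = 0.5
ry2 = 0.5

# Standardized coefficients for the two-predictor case.
beta1 = (ry1 - ry2 * r12) / (1 - r12 ** 2)
beta2 = (ry2 - ry1 * r12) / (1 - r12 ** 2)

# Model R^2 and the overall F statistic on (k, n - k - 1) degrees of freedom.
r_squared = beta1 * ry1 + beta2 * ry2
df_resid = n - k - 1
f_stat = (r_squared / k) / ((1 - r_squared) / df_resid)

# Standard error of a standardized coefficient: inflated by sqrt(VIF).
se_beta = math.sqrt((1 - r_squared) / df_resid * vif)
t1 = beta1 / se_beta
t2 = beta2 / se_beta

# Approximate 5% critical values from standard tables (assumed, not computed).
F_CRIT = 3.33   # F(2, 29), upper 5%
T_CRIT = 2.045  # t(29), two-sided 5%

print(f"r12 = {r12:.3f}, R^2 = {r_squared:.3f}")
print(f"F = {f_stat:.2f} (critical {F_CRIT}): significant = {f_stat > F_CRIT}")
print(f"t1 = {t1:.2f}, t2 = {t2:.2f} (critical {T_CRIT}): "
      f"significant = {abs(t1) > T_CRIT}, {abs(t2) > T_CRIT}")
```

Under these assumed numbers the overall F exceeds its critical value while neither t statistic does, which is the same pattern as in my output. Whether the same mechanism is what's going on in my data is exactly what I'd like to understand.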