I was reading Andrew Ng's CS229 lecture notes (page 12) about justifying squared loss risk as a means of estimating regressions parameters.

Andres explains that we first need to assume that the target function $y^{(i)}$ can be written as:

$$ y^{(i)} = \theta^Tx^{(i)} + \epsilon^{(i)}$$

where $e^{(i)}$ is the error term that captures unmodeled effects and random noise. Further assume that this noise is distributed as $\epsilon^{(i)} \sim \mathcal{N}(0, \sigma^2)$. Thus:

$$p(e^{(i)}) = \frac{1}{\sqrt{2\pi\sigma}}exp \left( \frac{-(e^{(i)})^2}{2\sigma^2} \right) $$

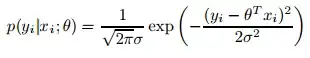

Thus we can see that the error term is a function of $y^{(i)}$, $x^{(i)}$ and $\theta$ as in:

$$e^{(i)} = f(y^{(i)}, x^{(i)}; \theta) = y^{(i)} - \theta^Tx^{(i)}$$

thus we can substitute to the above equation for $e^{(i)}$

$$p(y^{(i)} - \theta^Tx^{(i)}) = \frac{1}{\sqrt{2\pi\sigma}}exp \left( \frac{-(y^{(i)} - \theta^Tx^{(i)})^2}{2\sigma^2} \right)$$

Now we know that:

$p(e^{(i)}) = p(y^{(i)} - \theta^Tx^{(i)}) = p(f(y^{(i)}, x^{(i)}; \theta))$

Which is a function of the random variables $x^{(i)}$ and $y^{(i)}$ (and the non random variable $\theta$). Andrew then favors $x^{(i)}$ as being the conditioning variable and says:

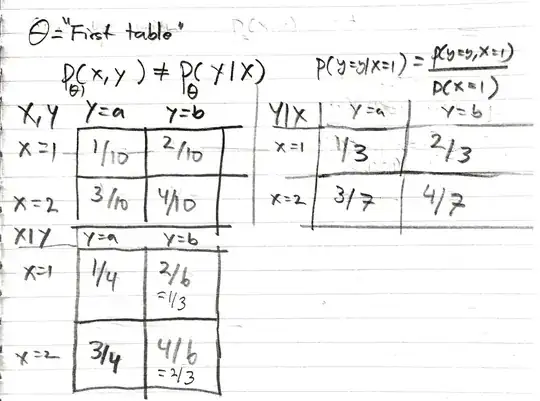

$p(e^{(i)}) = p(y^{(i)} \mid x^{(i)})$

However, I can't seem to justify why we would favor expressing $p(e^{(i)})$ as $p(y^{(i)} \mid x^{(i)})$ and not the other way round $p(x^{(i)} \mid y^{(i)})$.

The problem I have his derivation is that with only the distribution for the error (which for me, seems to be symmetric wrt to x and y):

$$\frac{1}{\sqrt{2\pi\sigma}}exp \left( \frac{-(e^{(i)})^2}{2\sigma^2} \right)$$

I can't see why we would favor $p(e^{(i)})$ as $p(y^{(i)} \mid x^{(i)})$ and not the other way round $p(x^{(i)} \mid y^{(i)})$ (just because we are interested in y, is not enough for me as a justification because just because that is our quantity of interest, it does not mean that the equation should be the way we want it to, i.e. it doesn't mean that it should be $p(y^{(i)} \mid x^{(i)})$, at least that doesn't seem to be the case from a purely mathematical perspective for me).

Another way of expressing my problem is the following:

The Normal equation seems to be symmetrical in $x^{(i)}$ and $y^{(i)}$. Why favor $p(y^{(i)} \mid x^{(i)})$ and not $p(x^{(i)} \mid y^{(i)})$. Furthermore, if its a supervised learning situation, we would get both pairs $(x^{(i)}, y^{(i)})$, right? Its not like we get one first and then the other.

Basically, I am just trying to understand why $p(y^{(i)} \mid x^{(i)})$ is correct and why $p(x^{(i)} \mid y^{(i)})$ is not the correct substitution for $p(e^{(i)})$.