I'm working with data from an instrument that is expected a priori to produce Gaussian-shaped signals:

\begin{equation} G = A\exp\left(-\dfrac{(x - \mu)^2}{2\sigma^2} \right) \end{equation}
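For reference, here is a minimal Python sketch of the peak model. It assumes the conventional parameterisation in which $\sigma$ is the standard deviation, so the exponent is $-(x-\mu)^2/(2\sigma^2)$. The function name `gaussian` is hypothetical.

```python
import numpy as np

def gaussian(x, A, mu, sigma):
    """Gaussian peak with amplitude A, centre mu and standard deviation sigma.

    Assumes the exponent -(x - mu)**2 / (2 * sigma**2).
    """
    x = np.asarray(x, dtype=float)
    return A * np.exp(-((x - mu) ** 2) / (2.0 * sigma**2))

# At the centre the peak equals A; one sigma away it drops to A * exp(-1/2).
values = gaussian([0.0, 1.0], A=2.0, mu=0.0, sigma=1.0)
print(values)
```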

The data are typically sparse, with only about 2-3 measurements per $G$. In this question I'm focussing on a single $G$, but in reality several analyte signals often overlap, giving overlapping $G$ that are then fitted simultaneously as described below.
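Because every $\mu$ and $\sigma$ is fixed by calibration, fitting several overlapping peaks simultaneously is still a *linear* least-squares problem in the amplitudes. Each peak contributes one column $G_{0,k}(x_i)$ to a design matrix. The following is a minimal sketch, assuming NumPy and purely illustrative calibration values. The names `unit_peak`, `fit_amplitudes`, `mus` and `sigmas` are hypothetical.

```python
import numpy as np

def unit_peak(x, mu, sigma):
    # Unit-amplitude Gaussian profile G_0 (assumes sigma is the standard deviation).
    return np.exp(-((x - mu) ** 2) / (2.0 * sigma**2))

def fit_amplitudes(x, y, mus, sigmas):
    """Least-squares amplitudes for overlapping peaks with fixed centres and widths."""
    x = np.asarray(x, dtype=float)
    # One column per peak: X[i, k] = G_0,k(x_i).
    X = np.column_stack([unit_peak(x, m, s) for m, s in zip(mus, sigmas)])
    amplitudes, *_ = np.linalg.lstsq(X, np.asarray(y, dtype=float), rcond=None)
    return amplitudes

# Illustrative, noise-free example: two overlapping peaks sampled at five points.
x = np.array([-1.0, 0.0, 1.0, 2.0, 3.0])
mus, sigmas = [0.0, 2.0], [0.8, 0.8]
y = 3.0 * unit_peak(x, 0.0, 0.8) + 1.5 * unit_peak(x, 2.0, 0.8)
print(fit_amplitudes(x, y, mus, sigmas))  # recovers approximately [3.0, 1.5]
```

With noisy data, the same design matrix $X$ also gives the usual amplitude covariance estimate $s^2 (X^\top X)^{-1}$, under the assumption of independent, equal-variance errors.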

To fit $G$ to our measurements, we calibrate $\mu$ and $\sigma$ a priori using reference signals and then use these calibrations to fix all parameters except $A$. So the fit reduces to

\begin{equation} G = AG_0 \end{equation}

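where $G_0$ is the unit-amplitude ($A = 1$) profile evaluated with the calibrated $\mu$ and $\sigma$. This one-parameter model is then fitted by least-squares minimization to determine $A$.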
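With only one free parameter, the least-squares problem has a closed-form solution. Setting $\frac{\mathrm{d}}{\mathrm{d}A}\sum_i (y_i - A g_i)^2 = 0$ with $g_i = G_0(x_i)$ gives $\hat{A} = \sum_i g_i y_i / \sum_i g_i^2$. The following is a minimal sketch, assuming NumPy, unweighted least squares, a Gaussian exponent of $-(x-\mu)^2/(2\sigma^2)$ and hypothetical data. The helper name `fit_amplitude` is illustrative.

```python
import numpy as np

def fit_amplitude(x, y, mu, sigma):
    """Closed-form least-squares amplitude for y ~ A * G_0(x) with fixed mu, sigma."""
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    g = np.exp(-((x - mu) ** 2) / (2.0 * sigma**2))  # unit-amplitude profile G_0(x_i)
    return np.sum(g * y) / np.sum(g * g)

# Hypothetical sparse measurement: three points across one peak.
x = np.array([-0.5, 0.1, 0.7])
y = np.array([2.1, 2.9, 2.3])
A_hat = fit_amplitude(x, y, mu=0.0, sigma=0.8)

# Cross-check against NumPy's general least-squares solver (single column, no intercept).
g = np.exp(-x**2 / (2 * 0.8**2))
A_lstsq = np.linalg.lstsq(g[:, None], y, rcond=None)[0][0]
print(A_hat, A_lstsq)  # the two estimates agree
```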

My main question is: what is the uncertainty ($\sigma_A$) in the fitted $A$?

My initial approach was to estimate $\sigma_A$ as the RMSE of the fit. But since the RMSE is essentially the standard deviation of the residuals, this seems like an overestimate: I want the confidence interval of the fitted parameter.
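The RMSE measures the scatter of the data about the fitted curve in the units of $y$. It is not yet an uncertainty on $A$. Consider a one-parameter linear model $y_i = A g_i + e_i$ with no intercept and independent, equal-variance errors. For that model, the standard least-squares result propagates the scatter as $\sigma_A = s/\sqrt{\sum_i g_i^2}$ with $s^2 = \sum_i e_i^2/(n-1)$. The minimal sketch below compares the two on hypothetical numbers. It assumes NumPy, and `amplitude_uncertainty` is a hypothetical helper.

```python
import numpy as np

def amplitude_uncertainty(x, y, mu, sigma):
    """Return (A_hat, rmse, sigma_A) for the one-parameter model y = A * G_0(x) + e.

    Assumes independent errors with a common variance; s uses n - 1 degrees of
    freedom because only one parameter (A) is fitted, so n >= 2 is required.
    """
    x = np.asarray(x, dtype=float)
    y = np.asarray(y, dtype=float)
    g = np.exp(-((x - mu) ** 2) / (2.0 * sigma**2))  # G_0(x_i)
    A_hat = np.sum(g * y) / np.sum(g * g)
    e = y - A_hat * g                                 # residuals
    n = y.size
    rmse = np.sqrt(np.mean(e**2))                     # scatter of the data, units of y
    s = np.sqrt(np.sum(e**2) / (n - 1))               # residual standard deviation
    sigma_A = s / np.sqrt(np.sum(g * g))              # standard error of A_hat
    return A_hat, rmse, sigma_A

A_hat, rmse, sigma_A = amplitude_uncertainty(
    [-0.5, 0.1, 0.7], [2.1, 2.9, 2.3], mu=0.0, sigma=0.8
)
print(f"A = {A_hat:.3f}, RMSE = {rmse:.3f}, sigma_A = {sigma_A:.3f}")
```

A confidence interval would then be $\hat{A} \pm t_{n-1}\,\sigma_A$, using a Student-t quantile with $n-1$ degrees of freedom. With only 2-3 points, that quantile is large.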

Can I safely use the following textbook equation for the standard error of a linear-regression slope (from which its confidence interval follows) in this context? Here the $x_i$ are the predictor values, $\bar{x}$ is their mean, the $e_i$ are the fit residuals and $n$ is the number of data points; 2 degrees of freedom are consumed by the 1 fitted variable and by the fact that the residuals sum to zero.

\begin{equation} \sigma_A = \dfrac{s_e}{\sqrt{\Sigma_i (x_i - \bar{x})^2}}, \qquad s_e = \sqrt{\dfrac{1}{n-2}\Sigma_i e_i^2} \end{equation}

I think "yes": all I have done is transform my $x$ into $G_0(x)$ before fitting a linear parameter. But I also think "no", because I'm not sure what $x_i - \bar{x}$ means in this equation: is it specific to linear regression?
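A quick simulation offers one way to test the question empirically. Generate many noisy copies of a peak with known $A$, refit each copy, and compare the observed spread of $\hat{A}$ with the candidate denominators. The first candidate is $\sqrt{\sum_i g_i^2}$, which treats $g_i = G_0(x_i)$ as the predictor with no intercept. The second is the textbook $\sqrt{\sum_i (g_i - \bar{g})^2}$. Both are evaluated with the true noise level, so only the denominators differ. The sampling points, noise level and peak parameters below are illustrative assumptions, and the sketch uses NumPy.

```python
import numpy as np

rng = np.random.default_rng(0)

# Illustrative set-up: three samples across one calibrated peak.
x = np.array([-0.6, 0.1, 0.8])
mu, width, A_true, noise_sd = 0.0, 0.8, 3.0, 0.2
g = np.exp(-((x - mu) ** 2) / (2.0 * width**2))  # G_0(x_i), the "predictor"

# Refit many noisy realisations with the closed-form least-squares amplitude.
n_trials = 20000
y = A_true * g + rng.normal(0.0, noise_sd, size=(n_trials, x.size))
A_hat = y @ g / np.sum(g * g)

empirical_sd = A_hat.std(ddof=1)
through_origin = noise_sd / np.sqrt(np.sum(g * g))                 # no-intercept denominator
with_intercept = noise_sd / np.sqrt(np.sum((g - g.mean()) ** 2))   # textbook slope denominator

print(f"empirical spread of A_hat:          {empirical_sd:.4f}")
print(f"noise_sd / sqrt(sum g^2):           {through_origin:.4f}")
print(f"noise_sd / sqrt(sum (g - g_bar)^2): {with_intercept:.4f}")
```

In real data the noise level is unknown and must be estimated from the residuals. With only 2-3 points, that estimate adds considerable scatter of its own.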

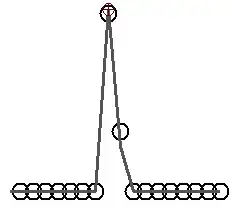

To give you a visualization, my data are almost as bad as these simulated data (but often the signal ontains one or two data points more):

Note: I am aware that Bayesian analysis would be a better method for passing information about $\mu$ and $\sigma$ to my fit, but I am not at liberty to change the analysis software right now. I need to limit myself to an estimate of $\sigma_A$.

edit: Another note that might exclude some solutions: I am analyzing thousands of measurements in bulk with no known true value.
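Because each single-peak fit has a closed form, thousands of measurements can be processed in bulk with array operations, and no true values are needed. The following is a minimal sketch under several assumptions. It uses NumPy and assumes that every measurement has the same number of points, stored as one row per measurement. It takes calibrated $\mu$ and $\sigma$ as scalars or per-row arrays, and it uses one fitted parameter per measurement (hence $n-1$). The helper name `batch_amplitudes` is hypothetical.

```python
import numpy as np

def batch_amplitudes(X, Y, mu, sigma):
    """Vectorised A_hat and sigma_A for many single-peak fits at once.

    X, Y: arrays of shape (m, n) with the sample positions and signals of m
    measurements with n points each; mu, sigma: scalars or shape (m,) arrays.
    Assumes independent, equal-variance noise within each measurement.
    """
    X = np.asarray(X, dtype=float)
    Y = np.asarray(Y, dtype=float)
    mu = np.asarray(mu, dtype=float).reshape(-1, 1)
    sigma = np.asarray(sigma, dtype=float).reshape(-1, 1)
    G = np.exp(-((X - mu) ** 2) / (2.0 * sigma**2))   # G_0 for every row
    sgg = np.sum(G * G, axis=1)
    A_hat = np.sum(G * Y, axis=1) / sgg
    resid = Y - A_hat[:, None] * G
    n = X.shape[1]
    s = np.sqrt(np.sum(resid**2, axis=1) / (n - 1))  # requires n >= 2
    return A_hat, s / np.sqrt(sgg)

# Illustrative use on simulated data: 5000 measurements with 3 points each.
rng = np.random.default_rng(1)
m, n = 5000, 3
X = rng.uniform(-1.0, 1.0, size=(m, n))
Y = 3.0 * np.exp(-X**2 / (2 * 0.8**2)) + rng.normal(0.0, 0.2, size=(m, n))
A_hat, sigma_A = batch_amplitudes(X, Y, mu=0.0, sigma=0.8)
print(A_hat.mean(), np.median(sigma_A))
```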