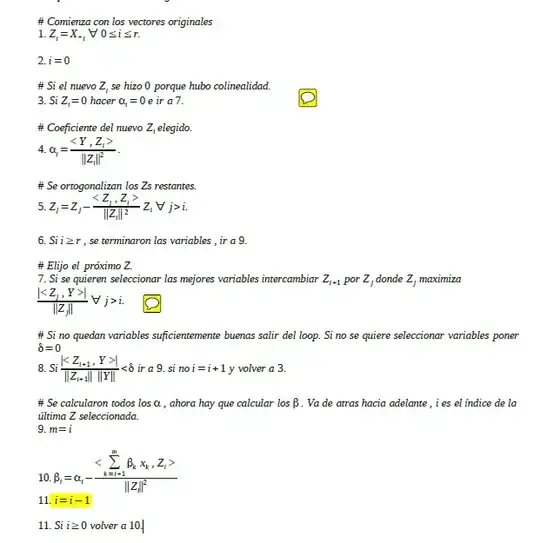

I know that orthogonalization in LS is used to avoid inverting $X'X$. The idea behind it is to find variables $Z$ that are orthogonal to each other. Although the process to find those is clear to me, I don't get the way to find the coefficients. The algorithm sets $Z_1=X_1$ (the first transformed variable is left equal to the original), and then calculates the coefficients in the new space of $Z$: $\alpha_1=\frac{\langle Y,Z_1\rangle}{\lVert Z_1\rVert^2}$ (point 4 in the pseudo-code). Next, $Z_i \leftarrow Z_i-\frac{\langle Z_j,Z_i\rangle}{\lVert Z_j\rVert^2}Z_j$ for each $j<i$ (point 5 in the pseudo-code). That is OK. I understand that.

What I don't understand is how the $\beta$ (the coefficients in the original space) are recovered, which is point 10 in the pseudo-code:

$\beta_m=\alpha_m-\frac{\sum_{i=m+1}^{M}\beta_i\,\langle X_i,Z_m\rangle}{\lVert Z_m\rVert^2}$.

It starts with the last $Z$, so when $m=M$ we get $\beta_M=\alpha_M$ (the sum runs over $i=m+1,\dots,M$, so it is empty).

Finally, when it reaches $\beta_1$, the result is different from $\alpha_1$. But we set $Z_1=X_1$, so I don't understand why $\beta_1\ne\alpha_1$.
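To make the puzzle concrete, here is a minimal NumPy sketch of the procedure as I understand it. The function name `gram_schmidt_ls` and the simulated data are my own assumptions for illustration, not part of the pseudo-code, and I compare against `np.linalg.lstsq` only as a sanity check.

```python
import numpy as np

def gram_schmidt_ls(X, y):
    """Least squares by successive orthogonalization (illustrative sketch)."""
    n, M = X.shape
    Z = X.astype(float).copy()          # Z_1 = X_1 is left untouched
    # Orthogonalize: Z_i <- Z_i - <Z_j, Z_i> / ||Z_j||^2 * Z_j for every j < i
    for i in range(1, M):
        for j in range(i):
            Z[:, i] -= (Z[:, j] @ Z[:, i]) / (Z[:, j] @ Z[:, j]) * Z[:, j]
    # Coefficients in the orthogonal space: alpha_m = <y, Z_m> / ||Z_m||^2
    alpha = np.array([(y @ Z[:, m]) / (Z[:, m] @ Z[:, m]) for m in range(M)])
    # Back-substitution from the last coefficient to the first
    beta = np.zeros(M)
    for m in range(M - 1, -1, -1):
        s = sum(beta[i] * (X[:, i] @ Z[:, m]) for i in range(m + 1, M))
        beta[m] = alpha[m] - s / (Z[:, m] @ Z[:, m])
    return Z, alpha, beta

# Simulated data (assumption): three predictors, the second correlated with the first
rng = np.random.default_rng(0)
X = rng.normal(size=(50, 3))
X[:, 1] += 0.8 * X[:, 0]
y = X @ np.array([1.0, -2.0, 0.5]) + 0.1 * rng.normal(size=50)

Z, alpha, beta = gram_schmidt_ls(X, y)
beta_ref = np.linalg.lstsq(X, y, rcond=None)[0]

print("alpha:", alpha)
print("beta: ", beta)
print("lstsq:", beta_ref)
```

On data like this the last coefficients agree ($\beta_M=\alpha_M$) and `beta` matches the `lstsq` solution, but the first ones do not agree, which is exactly the behaviour I am asking about.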

Can anyone give me an idea of why? Thanks! The algorithm is shown below (it is in Spanish, but you can follow what it is doing):