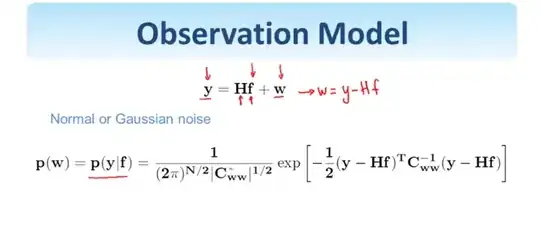

My professor has this slide up here:

Here, $y$ is an observed signal. $H$ is a deterministic transformation, which is assumed known. $f$ is the original signal (which we dont know), and $w$ is random gaussian noise. We are trying to recover $f$.

I understand everything, except for, why $p(\mathbf{w})$ = $p(\mathbf{y}|\mathbf{f})$.

That is, I understand that the multidimensional noise PDF is given by the above expression.

But why is that expression, ALSO equal to the likelihood function, $\mathbf{y}$, given $\mathbf{f}$? I'm not seeing this...