I'm fond of Unicode and what romanization attempts. My goal is to get a first latin char (for sorting purposes) of a non-latin character - so far I succeeded by transcribing Cyrillic, Greek, Hebrew, Katakana, Hiragana, Hangul (including all its syllables), Berber, Thai and Arabic letters by assigning the most appropriate starting letter to each case.

I also know that multiple systems for transliterations and transcribings (and romanization) exist - so far their differences are almost irrelevant for my needs. I'm not fond of Japanese itself - at most I might be able to recognize English terms written in Katakanas.

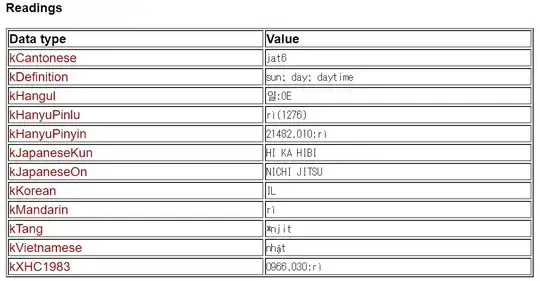

My problem is: how to assign Unicode code points U+4E00 thru U+9FFF by algorithm? For Hangul syllables this is quite easy: U+AC00 thru U+B097 => K (as all of them start with that); U+B098 thru B2E3 => N. I've looked at JS solutions like https://github.com/hexenq/kuroshiro/ and https://github.com/WaniKani/WanaKana/, but I only find the code for processing Hiraganas and Katakanas (which I already got), never Kanjis (although all of their demos succeed in processing them).

Is there a table or dictionary? If romanization of Kanjis is achieved thru first converting each Kanji into Katakanas, then how to achieve that?