The capacity formula

$$C = 0.5 \log (1+\frac{S}{N}) \tag{1}$$

is for a discrete-time channel.

Assuming you have a sequence of data $\left\lbrace a_n \right\rbrace$ to send, you need an orthonormal waveform set $\left\lbrace \phi_n(t) \right\rbrace$ for modulation. In linear modulation, to which M-ary modulation belongs, $\phi_n(t) = \phi(t - nT)$, where $T$ is the symbol duration and $\phi(t)$ is the prototype waveform, so that the baseband continuous-time TX signal becomes

$$x(t) = \sum_n a_n \phi(t-nT) \tag{2}$$

Typical modulations use the special case in which $\phi(t)$, combined with its matched filter at the receiver, satisfies the Nyquist ISI criterion, so that $a_n$ can be recovered. A well-known choice of $\phi(t)$ is the root raised cosine pulse.

The continuous-time AWGN channel is modeled as

$$y(t) = x(t) + n(t) \tag{3}$$

where $n(t)$ is a white Gaussian stochastic process.

From (2), we can see that $a_n$ is the projection of $x(t)$ onto $\phi_n(t)$. Doing the same with $n(t)$, the projections of $n(t)$ onto an orthonormal set form a sequence of iid Gaussian random variables $w_n = \langle n(t),\phi_n(t) \rangle$ (I really think that $n(t)$ is defined through its projections). Likewise, let $y_n = \langle y(t),\phi_n(t) \rangle$. Voilà, we have an equivalent discrete-time model

$$y_n = a_n + w_n \tag{4}$$
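The reduction from (3) to (4) can be checked numerically. The following is only a minimal sketch under assumptions that are not in the answer above: time is discretized on a fine grid, the prototype waveform is a unit-energy rectangular pulse (so shifted copies do not overlap), and the white noise has a hypothetical two-sided power spectral density `noise_psd`. The function name and parameters are illustrative.

```python
import numpy as np

def simulate_projection(num_symbols=2000, samples_per_symbol=16,
                        noise_psd=0.5, seed=0):
    """Project y(t) = x(t) + n(t) onto rectangular orthonormal pulses."""
    rng = np.random.default_rng(seed)
    T = 1.0                                  # symbol duration (assumed)
    dt = T / samples_per_symbol              # step of the fine time grid
    # Rectangular prototype pulse with unit energy: sum(phi**2) * dt == 1
    phi = np.full(samples_per_symbol, 1.0 / np.sqrt(T))

    a = rng.normal(0.0, 1.0, num_symbols)    # data symbols a_n
    # x(t) = sum_n a_n phi(t - nT); row n holds the samples of symbol n
    x = a[:, None] * phi[None, :]
    # Sampled white noise: per-sample variance noise_psd / dt
    n = rng.normal(0.0, np.sqrt(noise_psd / dt), x.shape)
    y = x + n

    # Inner products <., phi_n> approximated by Riemann sums
    y_n = (y * phi).sum(axis=1) * dt
    w_n = (n * phi).sum(axis=1) * dt
    return a, w_n, y_n

a, w_n, y_n = simulate_projection()
print("max |y_n - (a_n + w_n)|:", np.max(np.abs(y_n - (a + w_n))))
print("sample variance of w_n:", w_n.var())
```

With these assumptions the projected noise samples have variance close to `noise_psd`, and `y_n` matches `a_n + w_n` up to floating-point error, which is what (4) states.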

In formula (1), $S$ and $N$ are the energies (variances, if $a_n$ and $w_n$ are zero-mean) of $a_n$ and $w_n$, respectively. If $a_n$ and $w_n$ are Gaussian, so is $y_n$, and the mutual information between $a_n$ and $y_n$ is maximized, which gives the capacity (1). (I can add a simple proof if you want.)
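A sketch of the standard argument, assuming $a_n$ and $w_n$ are independent, $w_n$ is Gaussian with variance $N$, $a_n$ has variance at most $S$, and $h(\cdot)$ denotes differential entropy (notation not used above):

$$I(a_n; y_n) = h(y_n) - h(y_n \mid a_n) = h(y_n) - h(w_n) \le 0.5 \log \left(2\pi e (S+N)\right) - 0.5 \log \left(2\pi e N\right) = 0.5 \log (1+\frac{S}{N})$$

The inequality holds because, among all distributions with variance at most $S+N$, the Gaussian has the largest differential entropy. It is met with equality when $y_n$ is Gaussian with variance $S+N$, which happens when $a_n$ is Gaussian with variance $S$.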

What does it mean that the input signal is Gaussian? Does it mean that the amplitude of each symbol of a codeword must be taken from a Gaussian ensemble?

It means that the random variables $a_n$ are Gaussian.

What is the difference between using a special codebook (in this case Gaussian) and modulating the signal with M-ary signaling, say MPSK?

The waveform set $\left\lbrace \phi_n(t) \right\rbrace$ needs to be orthonormal, which is true for M-PSK, so that the $w_n$ are iid Gaussian.

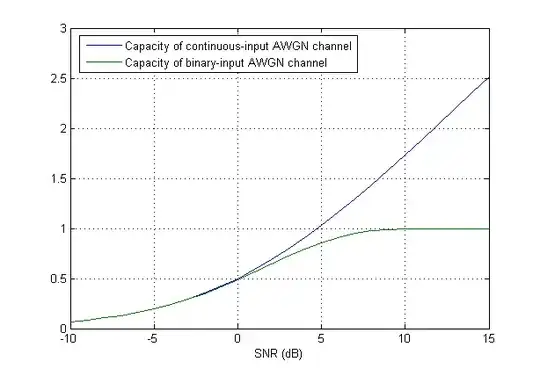

Update: However, $a_n$ is quantized (it takes values in a finite constellation), so in general it is no longer Gaussian. There is some research on this topic, such as the use of Lattice Gaussian Coding (link).
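To get a feel for the gap between a finite constellation and a Gaussian input, here is a rough numerical sketch. It assumes the simplest real-valued case, equiprobable BPSK symbols $a_n = \pm\sqrt{S}$ in model (4), takes the logarithm in (1) to base 2 (bits per channel use), and evaluates the mutual information by numerical integration on a grid. The function names are illustrative and not taken from any library.

```python
import numpy as np

def gaussian_capacity(S, N):
    """Equation (1) with log base 2, in bits per channel use."""
    return 0.5 * np.log2(1.0 + S / N)

def bpsk_mutual_information(S, N, grid_points=20001):
    """I(a; y) in bits for a = +/- sqrt(S) equiprobable and w ~ N(0, N)."""
    amp, sigma = np.sqrt(S), np.sqrt(N)
    # Integration grid covering both Gaussian lobes plus 10 sigma on each side
    y = np.linspace(-amp - 10 * sigma, amp + 10 * sigma, grid_points)
    dy = y[1] - y[0]

    def pdf(mean):
        return np.exp(-(y - mean) ** 2 / (2 * N)) / np.sqrt(2 * np.pi * N)

    # Output density: equal mixture of two Gaussians centered at +/- amp
    p_y = 0.5 * (pdf(amp) + pdf(-amp))
    # Differential entropy h(y) = -integral of p log2 p (skip exact zeros)
    mask = p_y > 0
    h_y = -np.sum(p_y[mask] * np.log2(p_y[mask])) * dy
    # Differential entropy of the Gaussian noise
    h_w = 0.5 * np.log2(2 * np.pi * np.e * N)
    return h_y - h_w

for snr in (0.1, 1.0, 10.0, 100.0):
    print(snr, bpsk_mutual_information(snr, 1.0), gaussian_capacity(snr, 1.0))
```

Under these assumptions the BPSK value stays below (1) at every SNR and saturates at 1 bit per channel use, while the Gaussian-input capacity keeps growing.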